The Grafana alerting feature checks whether monitored metrics cross the thresholds you define, using the monitoring you have already configured. It notifies users so they can identify and resolve issues quickly, helping keep your cluster secure and stable.

This section describes how to deploy and manage alert components. YMatrix supports alerting for the following metrics:

Four alert notification methods are available. You may choose one or combine multiple methods as needed:

To use alerting, you must first deploy and enable monitoring. For details, see: Grafana Installation and Management

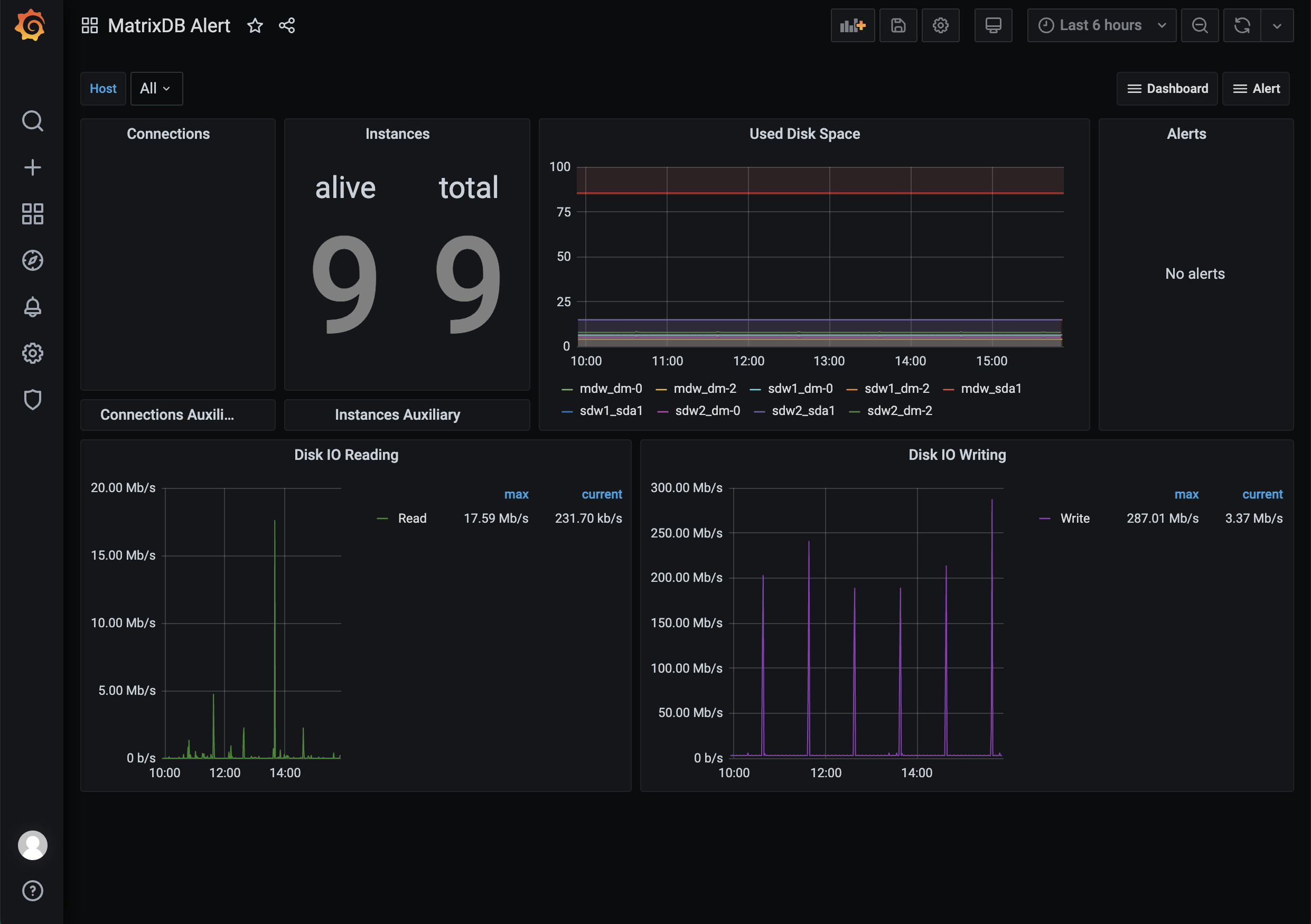

After deployment, you will see the following interface:

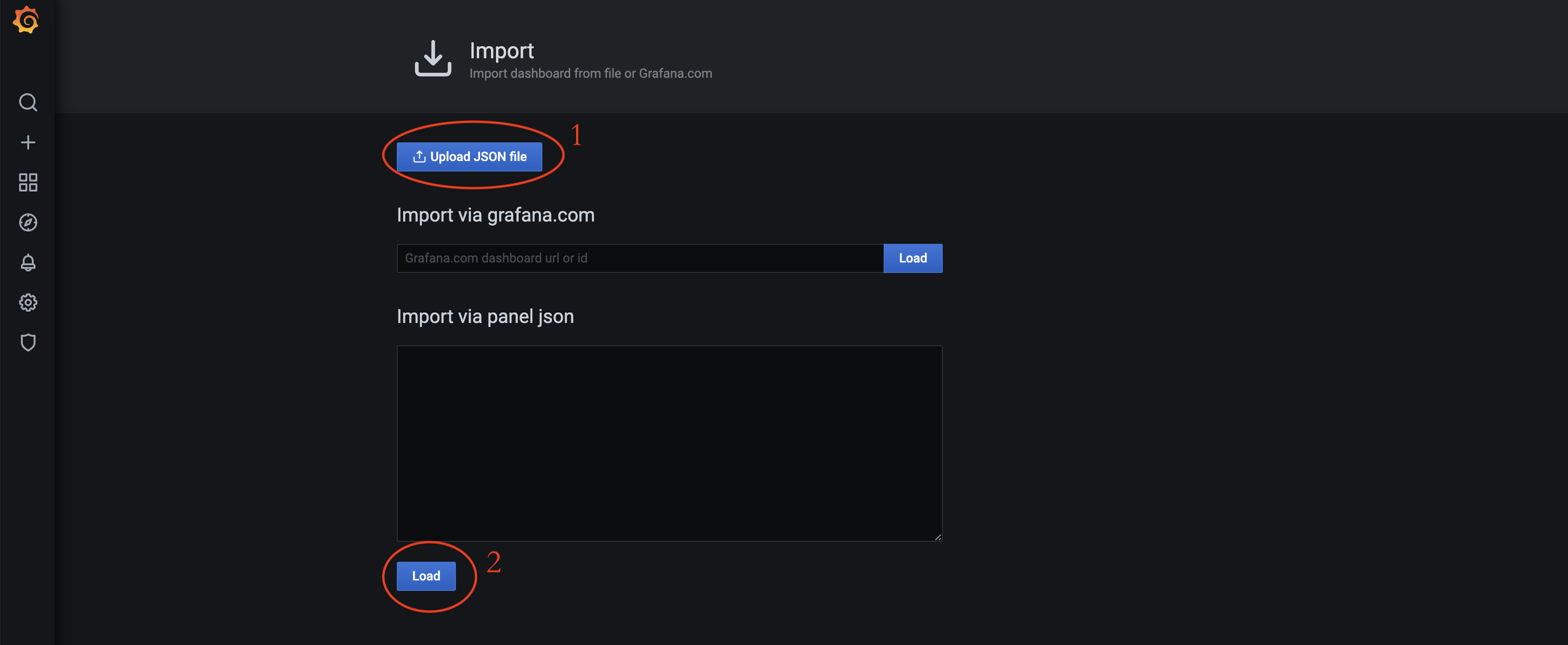

Before importing the predefined alert panels, copy the alert.json file from the server to your local machine. As with dashboard.json and database.json, you locate the file on the server, copy it to your local machine, and then upload it through the Grafana interface. Follow these steps:

Log in to the server and switch to the mxadmin user. Locate the alert.json file at the path shown in the image. You can use the cd command, find command, or any other method you prefer.

[mxadmin@mdw ~]$ cd /opt/ymatrix/matrixdb5/share/doc/postgresql/extension

##or

[mxadmin@mdw ~]$ find /opt/ymatrix/matrixdb5/share/doc/postgresql/extension -name alert.json

Next, use the scp command to copy the file to your local machine. Permission issues may arise. Consider copying the file to the shared /tmp/ directory first, then transferring it from /tmp/ to your local machine.

Note!

When copying from /tmp/, make sure you switch users to avoid permission issues.

[mxadmin@mdw]$ scp mxadmin@<server IP address>:/opt/ymatrix/matrixdb5/share/doc/postgresql/extension/"alert.json" mxadmin@<server IP address>:/tmp/

~ scp waq@<server IP address>:/tmp/"alert.json" /Users/akkepler/workplace/Grafana

Finally, open your local folder or use the command line to verify that the file was copied successfully. After confirmation, modify all instances of the variable $host in the local alert.json file to include all actual hostnames in your cluster. For example, if your cluster consists of one master and two segments named mdw, sdw1, and sdw2, replace every $host with 'mdw', 'sdw1', 'sdw2'. After modification, import the file into the Grafana interface.
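The $host substitution described above can be scripted instead of edited by hand. The following is a minimal sketch, assuming the variable appears literally as `$host` in the file and that hostnames contain no characters special to sed (`/`, `&`, `\`). The function name `replace_host_var` and the output file name are hypothetical and not part of YMatrix or Grafana.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not shipped with YMatrix): print FILE with every
# literal $host replaced by a quoted, comma-separated host list.
# Usage: replace_host_var FILE HOST [HOST...]
replace_host_var() {
    local file="$1"; shift
    local list="" h
    for h in "$@"; do
        # Append 'host', adding ", " before every entry except the first.
        list+="${list:+, }'${h}'"
    done
    # \$host matches the literal text "$host"; the input file is not modified.
    sed 's/\$host/'"${list}"'/g' "$file"
}

# Example (assumed cluster of one master and two segments):
#   replace_host_var alert.json mdw sdw1 sdw2 > alert.local.json
```

Because the result is written to standard output, the original alert.json stays untouched and the two files can be compared before importing. Note that the pattern also matches any longer name that begins with `$host`, so review the output if the file contains such names.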

Note!

The dashboard.json and database.json files uploaded to your Grafana interface must also have their variables modified. These files must be uploaded before alert.json. In dashboard.json, replace all ${cluster} with local and $host with actual hostnames. In database.json, only replace ${cluster} with local.

Select the alert.json file located at /opt/ymatrix/matrixdb5/share/doc/postgresql/extension.

The alert panel functions the same as the monitoring panel. You can switch between panels using the dropdown menu located in the upper-right corner of each panel.

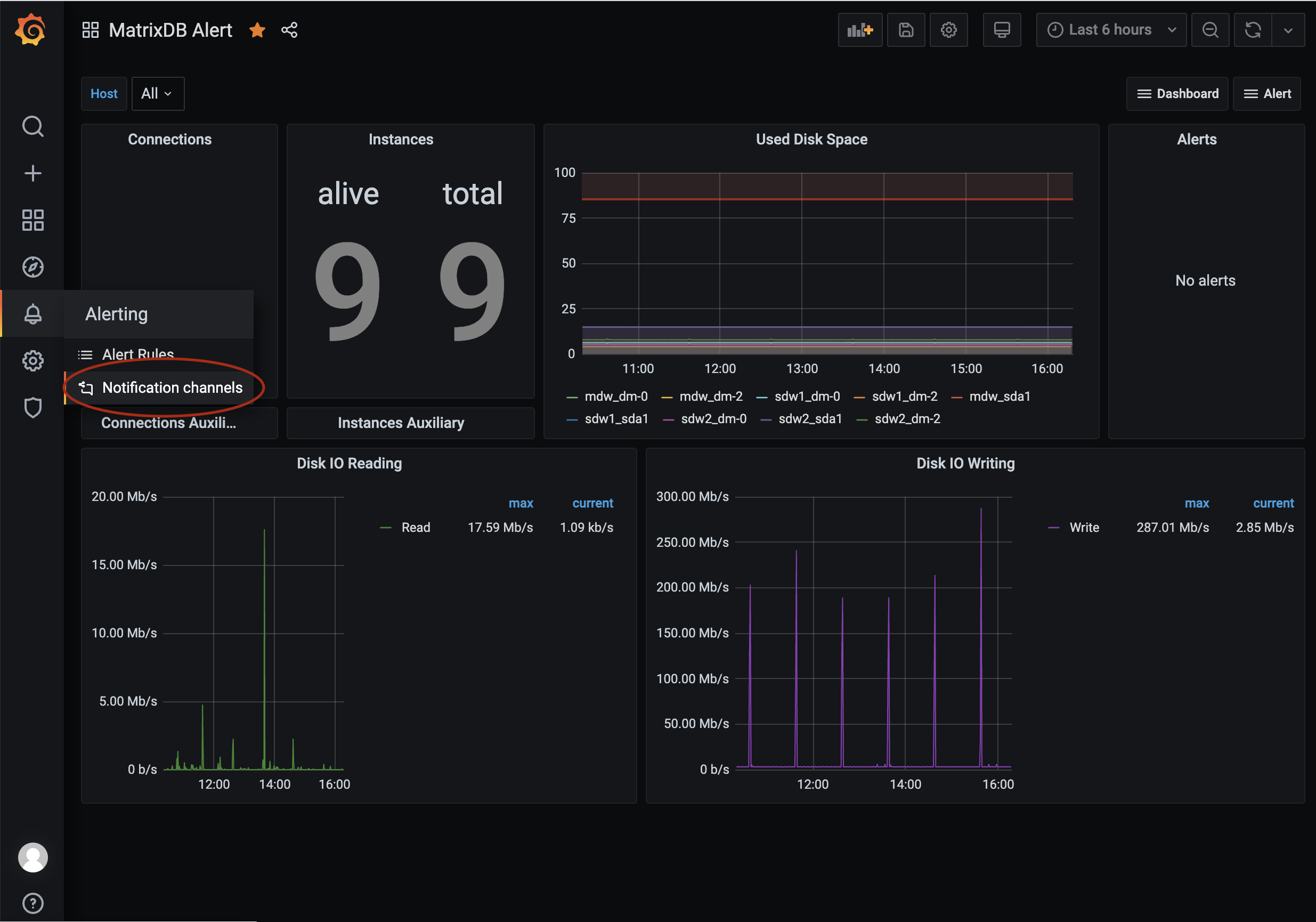

First, configure Notification Channels. Access the entry point as shown below:

YMatrix provides built-in SMS alerting through the Alibaba Cloud SMS service, which is the recommended option. When an alert is triggered, SMS messages are sent to the configured phone numbers. Batch sending is supported. Before using it, apply for and configure the Alibaba Cloud SMS service. For details, refer to:

Alibaba Cloud Documentation: Quick Start for Domestic SMS

Alternatively, you can configure a Webhook for SMS alerts. This requires custom programming of the Webhook logic. For details, see Grafana Alert Documentation: Webhook Notifications

Create an alert.yaml file under /etc/matrixdb/:

# aliyun service config

aliyun_service:

  access_key_id: "your access_key_id"

  access_key_secret: "your access_key_secret"

  signature: "signature"

  sms_template_code: "SMS_123445678"

Here:

access_key_id and access_key_secret are issued upon activation of Alibaba Cloud services.

signature is the approved signature text registered in the Alibaba Cloud SMS console.

sms_template_code is the template ID starting with "SMS_", registered and approved in the Alibaba Cloud SMS console.

Use ${name} as the variable for the alert message in the template.

Example template:

Dear Administrator, your database system has triggered a ${name} alert. Please log in to view details and take action promptly.

As shown:

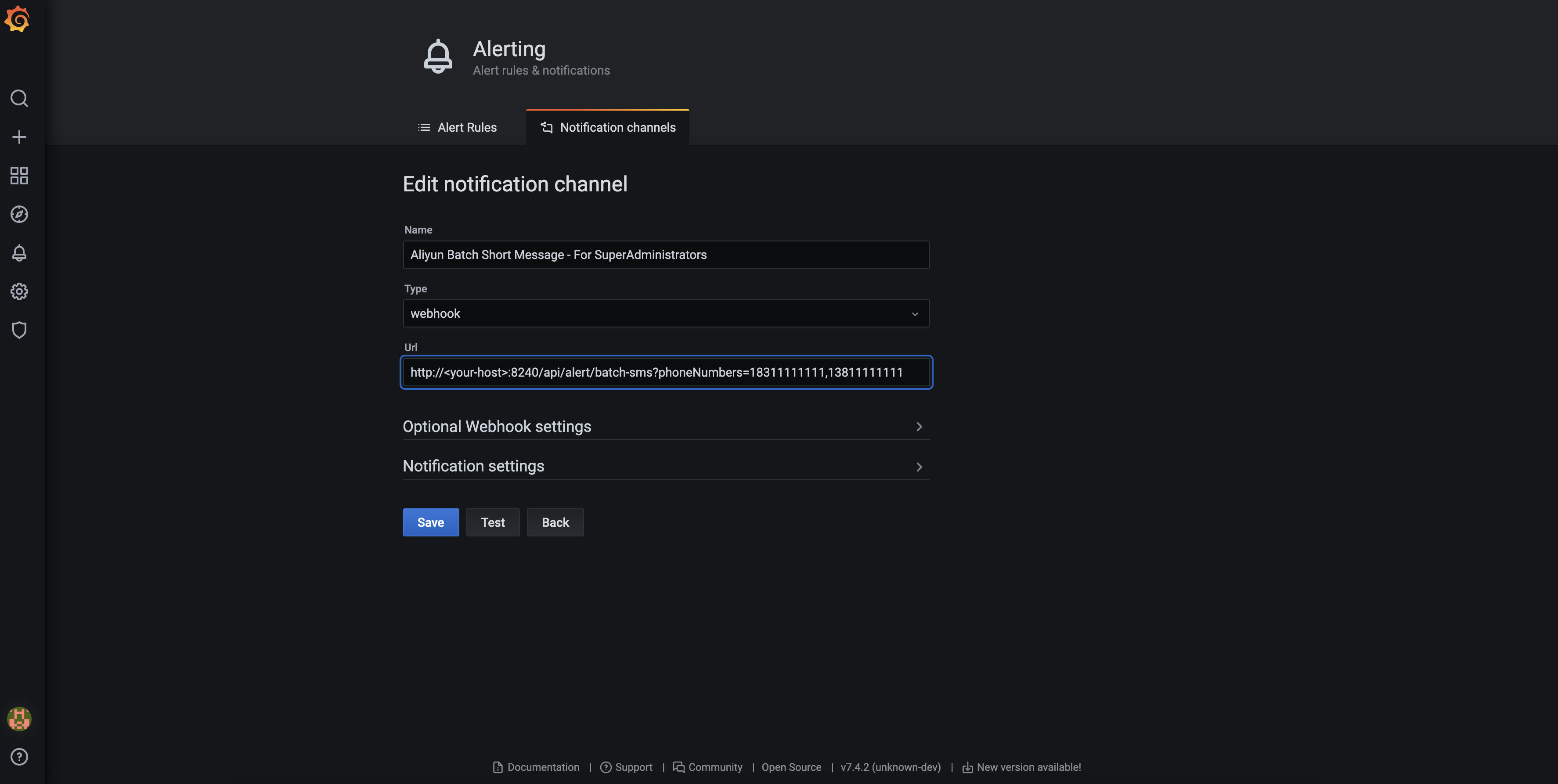
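Before creating the channel, it can help to confirm that alert.yaml contains all four SMS-related fields described above. The following is a minimal sketch that performs a plain text check with grep; it does not parse YAML or validate the values, and the function name `missing_sms_keys` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list the SMS-related keys that are missing from an
# alert.yaml file. Prints one missing key per line and prints nothing when
# all four keys are present.
# Usage: missing_sms_keys /etc/matrixdb/alert.yaml
missing_sms_keys() {
    local file="$1" key
    for key in access_key_id access_key_secret signature sms_template_code; do
        # A key counts as present if some line starts (after optional
        # indentation) with "key:".
        grep -Eq "^[[:space:]]*${key}:" "$file" || printf '%s\n' "$key"
    done
}
```

An empty result means every expected key appears in the file; any printed name points at a field that still needs to be added.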

Suggested naming convention for the Channel:

Aliyun Batch Short Message - For SuperAdministrators

"SuperAdministrators" represents a group of recipients. To define another group, create a separate rule. Note that the phoneNumbers parameter is case-sensitive. Multiple numbers can be specified, separated by commas, for batch sending:

http://<host>:<port>/api/alert/batch-sms?phoneNumbers=18311111111,13811111111
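When a recipient group has many members, assembling the phoneNumbers list by hand is error-prone. The following is a minimal sketch that joins the numbers with commas and rejects entries containing anything other than digits. The function name `build_alert_url` is hypothetical, the base URL in the example is a placeholder rather than a real YMatrix address, and the digits-only rule is an assumption based on the sample numbers above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build a channel URL from a base URL, an endpoint
# path, and one or more phone numbers.
# Usage: build_alert_url BASE_URL ENDPOINT NUMBER [NUMBER...]
build_alert_url() {
    local base="$1" endpoint="$2"
    shift 2
    if [ "$#" -lt 1 ]; then
        echo "at least one phone number is required" >&2
        return 1
    fi
    local n
    for n in "$@"; do
        # Assumption: numbers are digits only, as in the sample numbers.
        case "$n" in
            ''|*[!0-9]*) echo "invalid phone number: $n" >&2; return 1 ;;
        esac
    done
    local IFS=,   # "$*" joins the remaining arguments with the first IFS character
    printf '%s%s?phoneNumbers=%s\n' "$base" "$endpoint" "$*"
}

# Example (placeholder base URL):
#   build_alert_url "http://host.example:8080" /api/alert/batch-sms 18311111111 13811111111
```

The parameter name is written exactly as `phoneNumbers` in the format string, which avoids the case-sensitivity problem mentioned above.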

In addition to SMS, YMatrix supports voice call alerts via Alibaba Cloud's voice service. When triggered, calls are made to configured phone numbers. Batch calling is supported. Before use, apply for and configure Alibaba Cloud voice service. For details, see: Alibaba Cloud Documentation: Quick Start for Domestic Voice Service

You may also configure a Webhook for voice alerts, though this requires custom logic implementation. For more information, see Grafana Alert Documentation: Webhook Notifications

Add tts_template_code and region_id to the alert.yaml file under /etc/matrixdb/:

# aliyun service config

aliyun_service:

  access_key_id: "your access_key_id"

  access_key_secret: "your access_key_secret"

  signature: "signature"

  sms_template_code: "SMS_123445678"

  tts_template_code: "TTS_123456788"

  region_id: "cn-hangzhou"

access_key_id and access_key_secret are shared with the SMS service.

tts_template_code starts with "TTS_" and refers to the approved TTS voice template in the Alibaba Cloud console.

Use ${name} as the variable for the alert message in the template.

Example:

Dear Administrator, your database system has triggered a ${name} alert. Please log in to view details and take action promptly.

Suggested naming convention:

Aliyun Voice Message - For SuperAdministrators

"SuperAdministrators" denotes a recipient group. Define additional rules for other groups. The phoneNumbers parameter is case-sensitive. Multiple numbers can be specified, separated by commas:

http://<host>:<port>/api/alert/vms?phoneNumbers=18311111111,13811111111

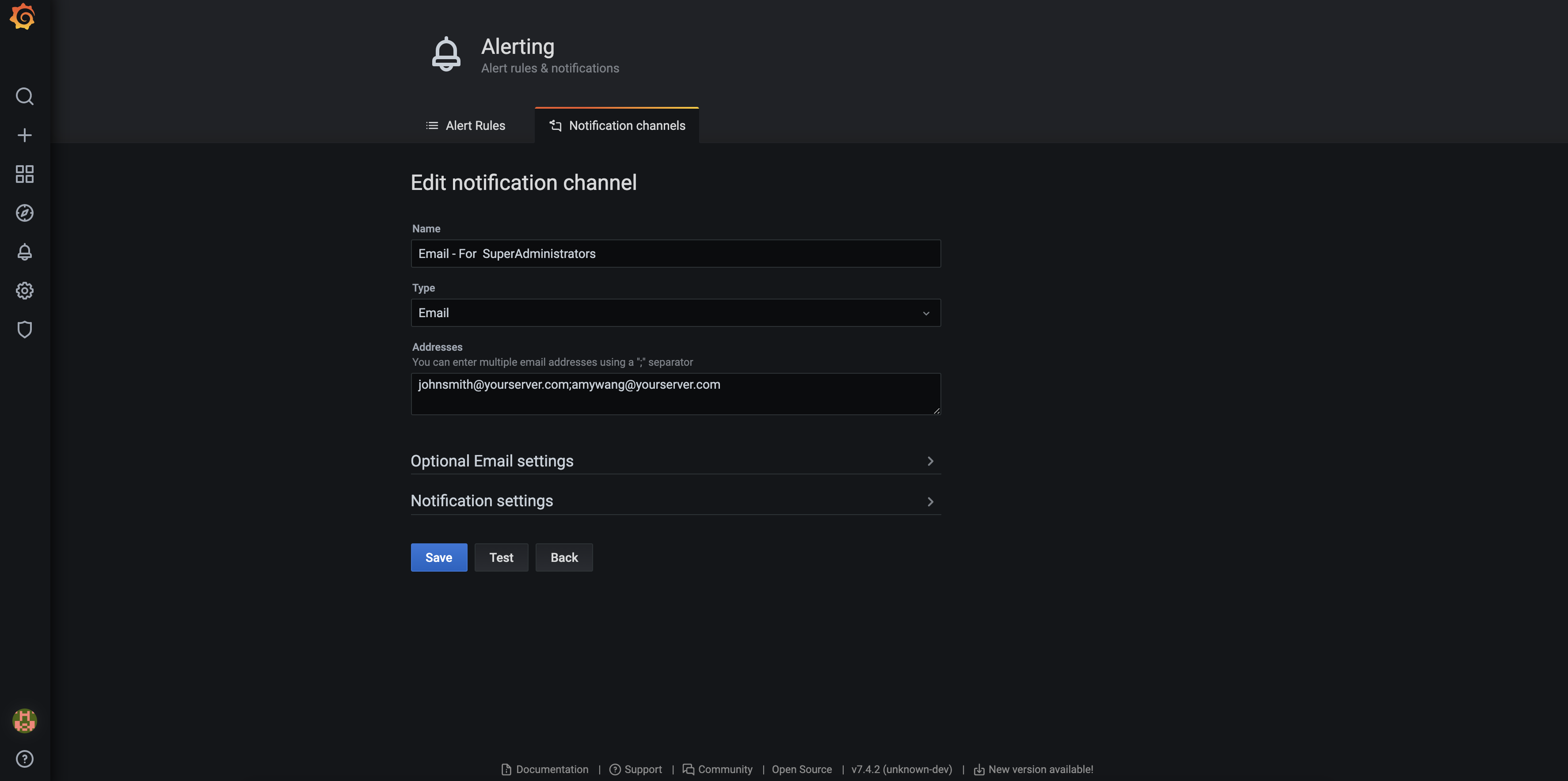

Grafana provides built-in Email alerting. It is simple to configure and easy to use.

Email alerts use Grafana's native SMTP support. Configure the SMTP server in Grafana's configuration file. The file path may vary depending on deployment. Default path on CentOS 7:

/etc/grafana/grafana.ini

For more information, see: Grafana Official Documentation: Configuration

Example configuration:

#################################### SMTP / Emailing #####################

[smtp]

enabled = true

host = <your smtp host>

user = <your user>

# If the password contains # or ; you have to wrap it with triple quotes. Ex """#password;"""

password = <your password>

;cert_file =

;key_file =

skip_verify = true

from_address = <your email address>

from_name = Grafana

;ehlo_identity =

;startTLS_policy =

[emails]

welcome_email_on_sign_up = false

templates_pattern = emails/*.html

As shown:

Suggested naming convention:

Email - For SuperAdministrators

"SuperAdministrators" refers to a recipient group. Define additional rules for other groups.

Note!

If configuration fails, check /var/log/grafana/grafana.log for error details.
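When email alerts fail, one quick check alongside the log is whether the [smtp] section of grafana.ini actually holds the values you expect. The following is a minimal sketch of an INI lookup written with awk. The function name `ini_get` is hypothetical, and the parser handles only simple `key = value` lines, skipping lines that begin with `#` or `;`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the value of KEY inside [SECTION] of an INI file.
# Prints nothing if the key is absent or commented out.
# Usage: ini_get FILE SECTION KEY
ini_get() {
    awk -v section="$2" -v key="$3" '
        /^[ \t]*[#;]/ { next }                       # skip comment lines
        /^\[/ { in_section = ($0 == ("[" section "]")); next }
        in_section {
            pos = index($0, "=")
            if (pos == 0) next
            k = substr($0, 1, pos - 1)
            v = substr($0, pos + 1)
            gsub(/^[ \t]+|[ \t]+$/, "", k)           # trim the key
            gsub(/^[ \t]+|[ \t]+$/, "", v)           # trim the value
            if (k == key) { print v; exit }
        }
    ' "$1"
}

# Example:
#   ini_get /etc/grafana/grafana.ini smtp enabled
```

If the example prints nothing or prints false, SMTP is not enabled in the file Grafana is reading, which is worth ruling out before investigating the mail server itself.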

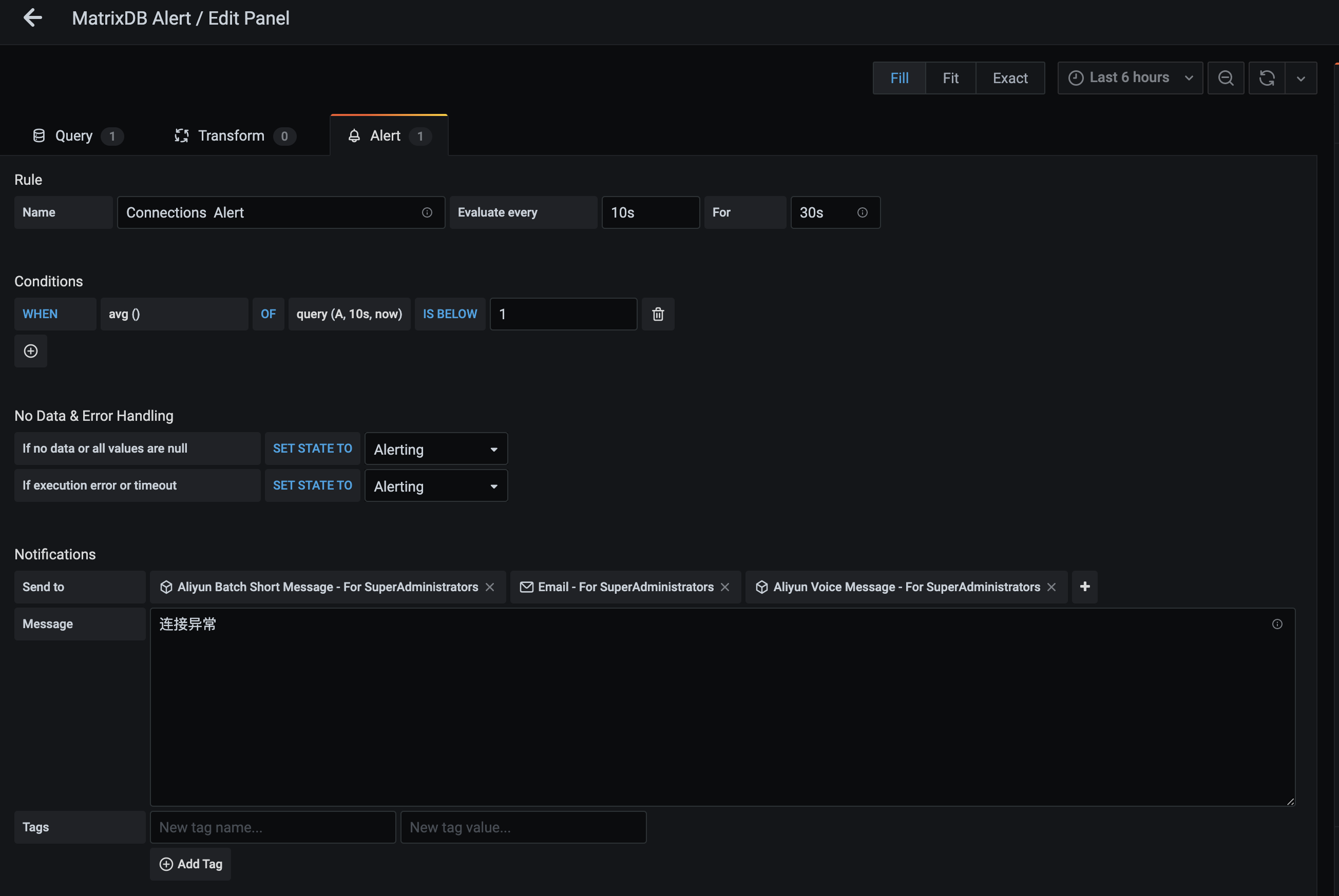

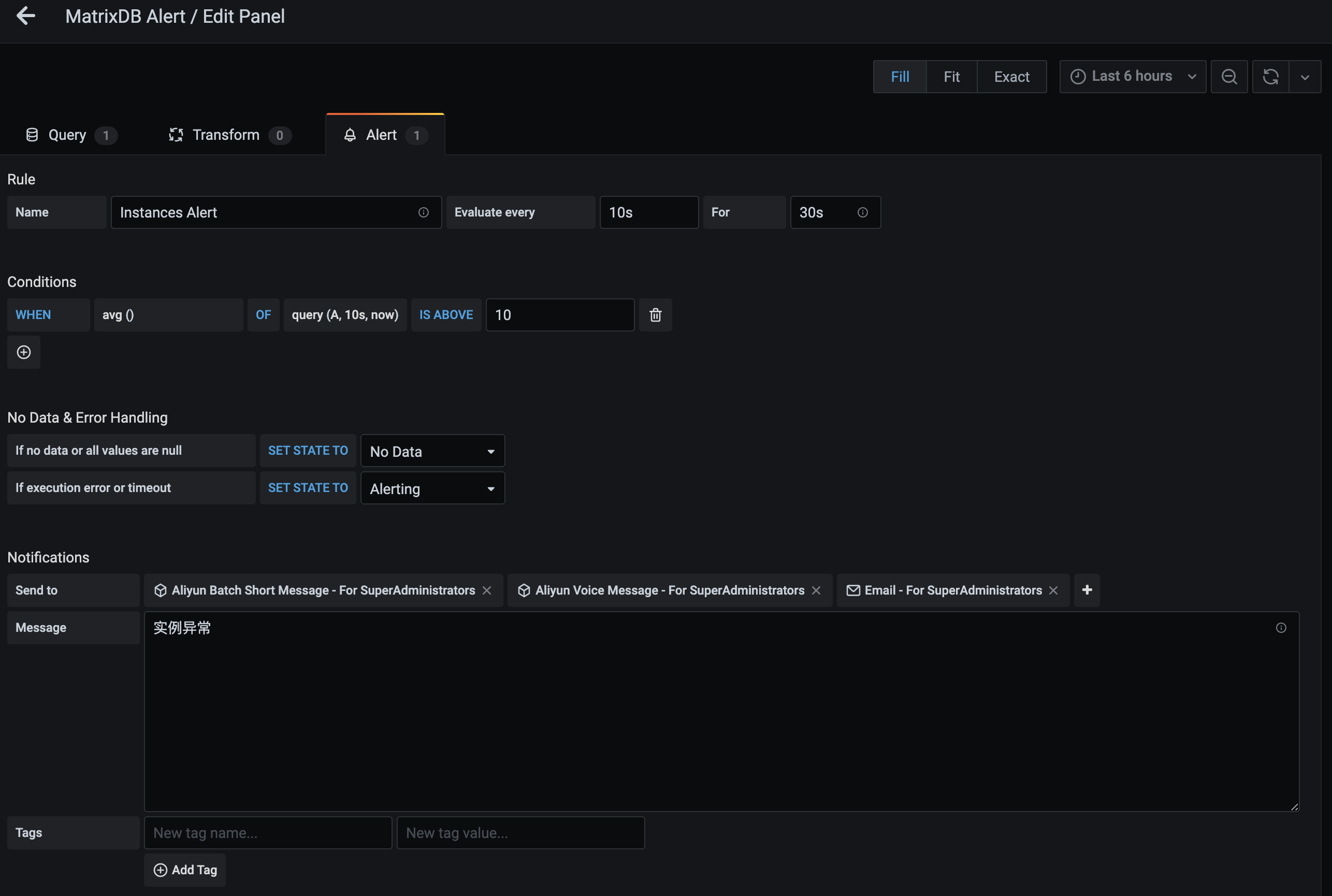

After setting up notification channels, configure alert rules for each monitoring panel.

For details on alert configuration, see: Grafana Official Documentation: Alert

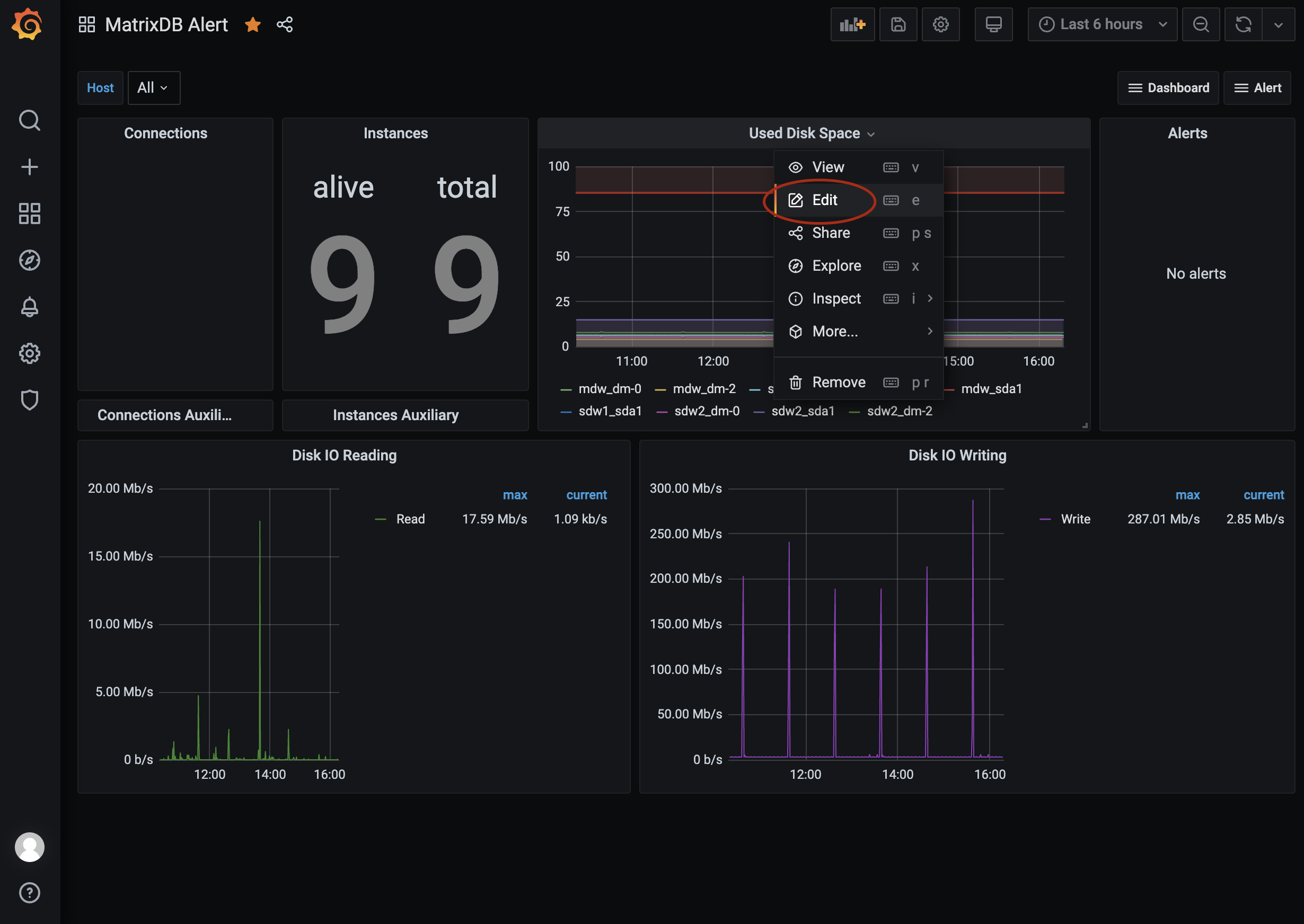

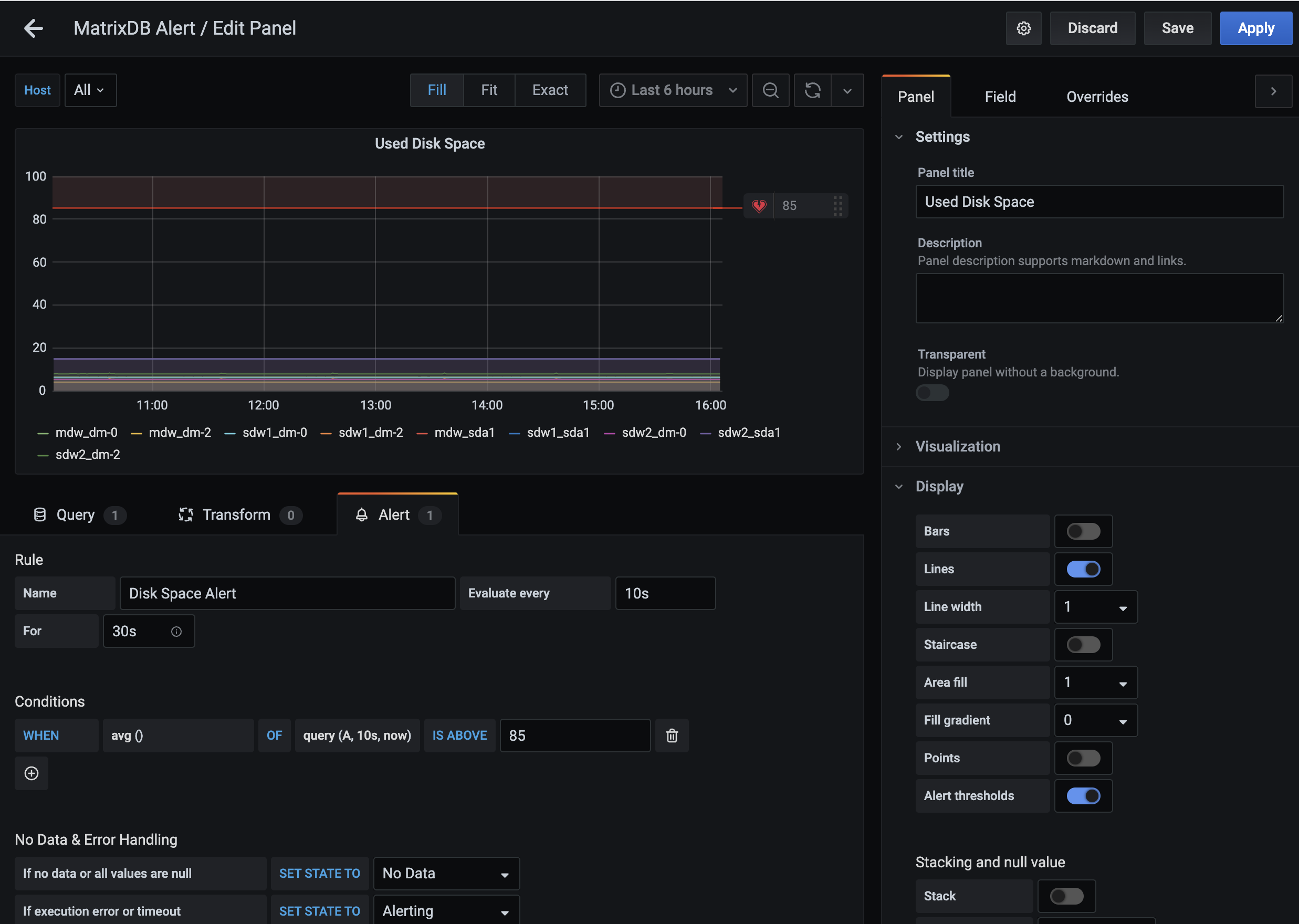

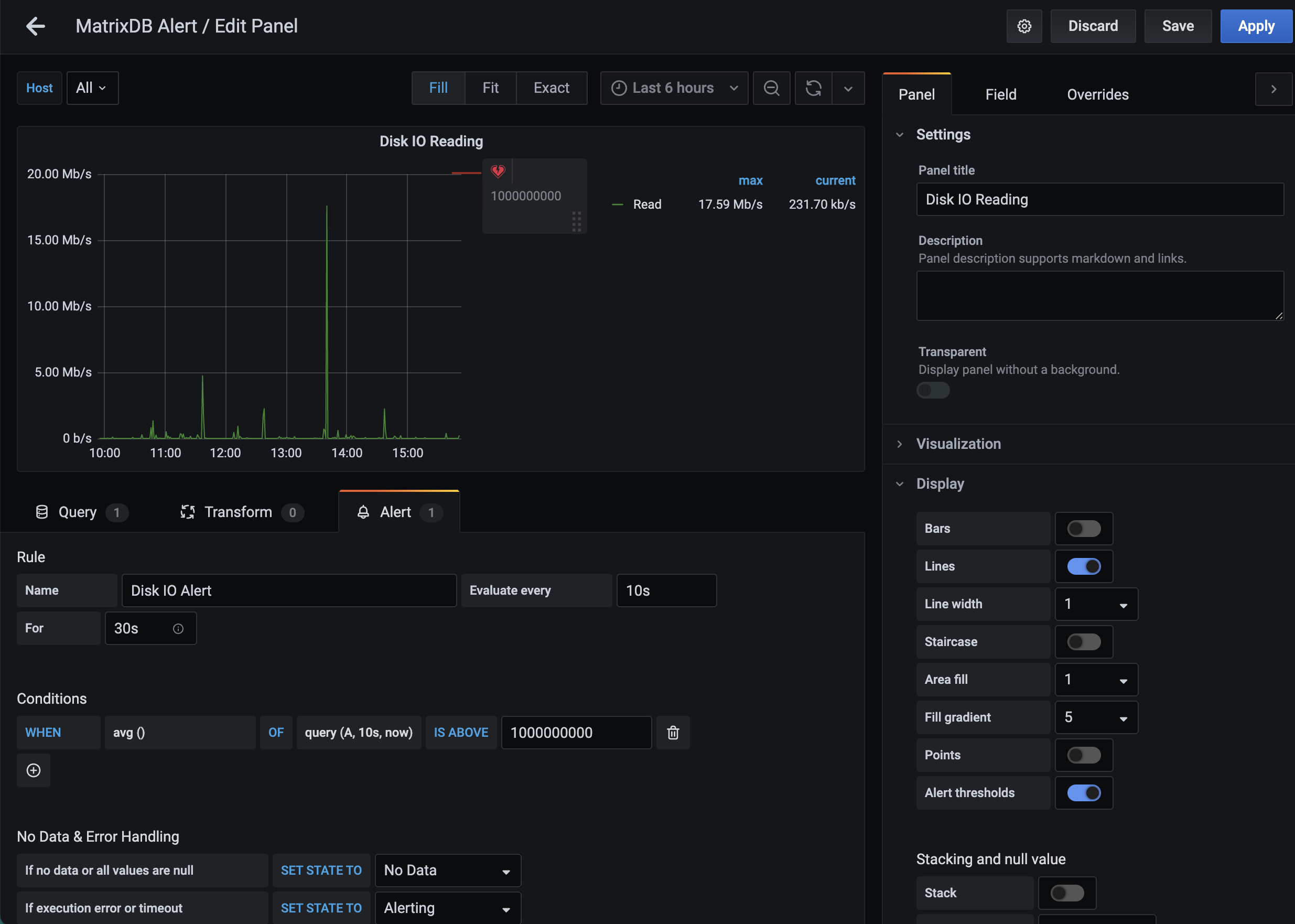

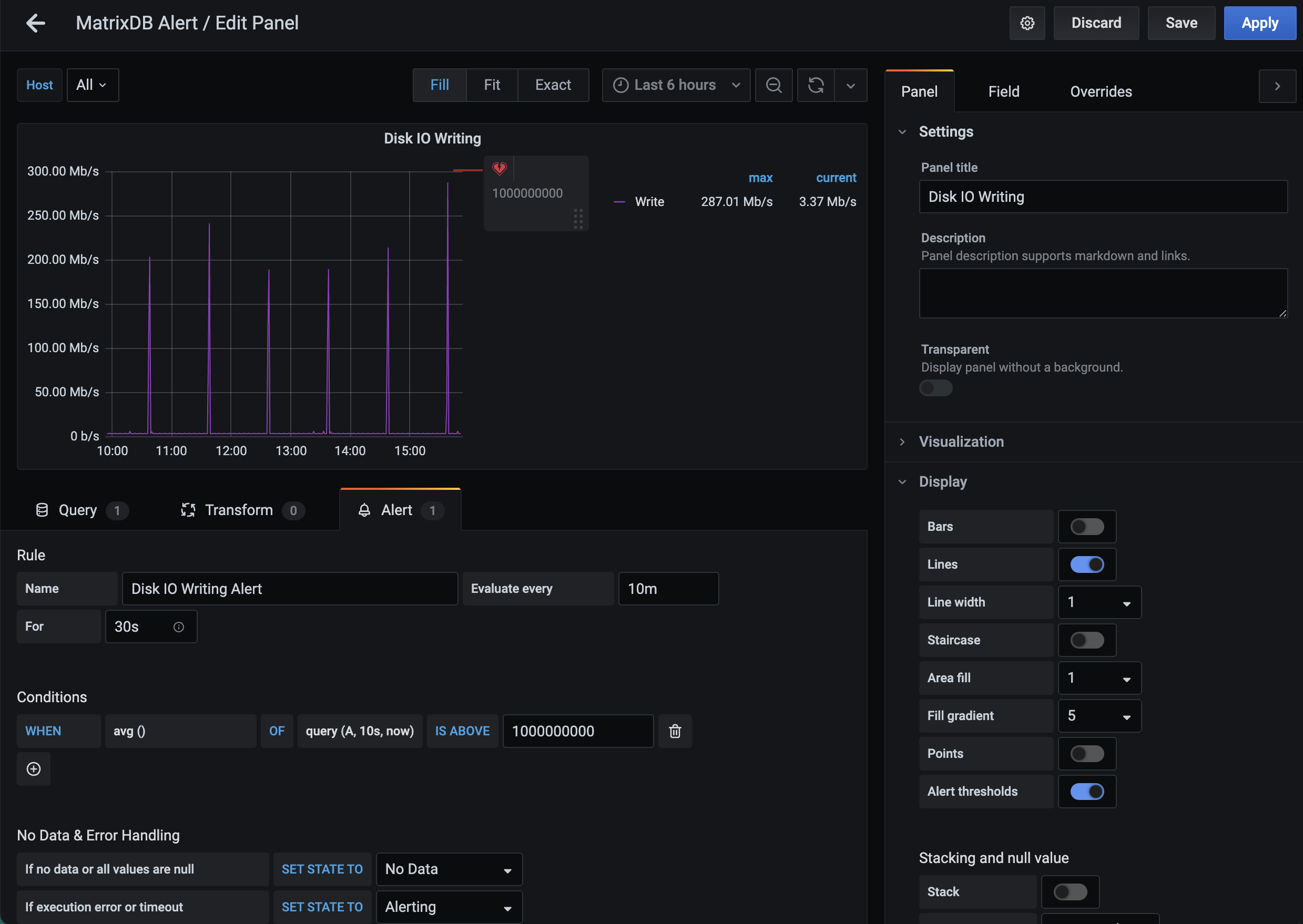

Hover over a specific alert panel name. A dropdown arrow appears on the right. Click "Edit" to enter edit mode.

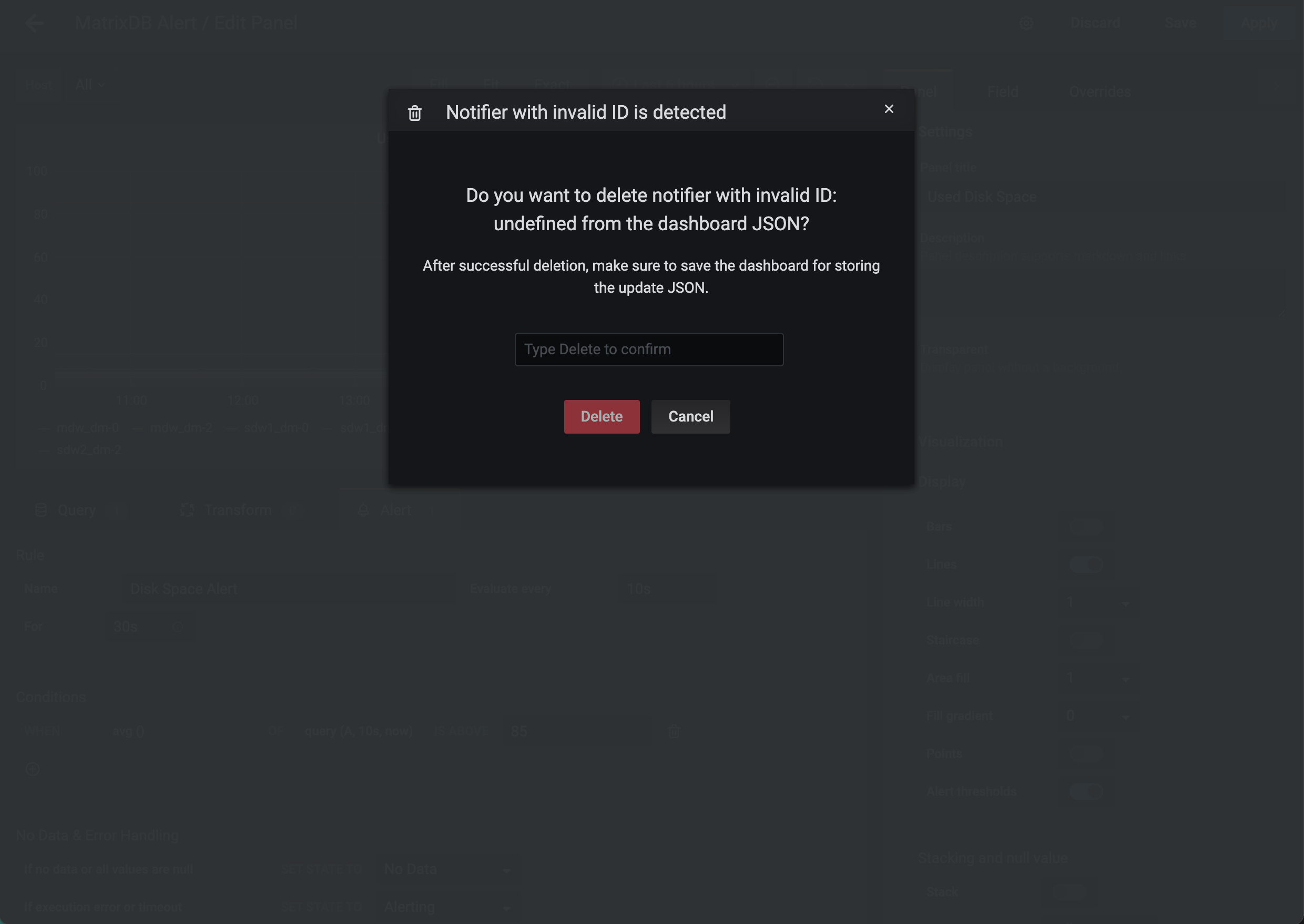

Switch from "Query" to the "Alert" tab under "Edit Panel". If a popup appears saying "Notifier with invalid ID is detected", do not click "Delete", because this may remove the panel. Click "Cancel" and close the window instead.

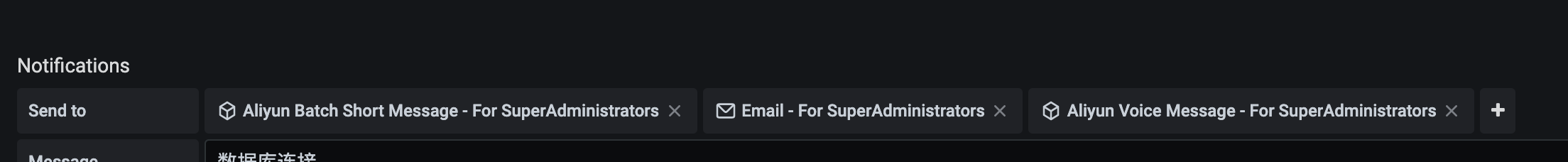

Scroll down to "Notifications". Enter a concise alert message (4–6 words recommended). This message appears in emails and replaces the ${name} variable in SMS and voice templates. Keep it short due to character limits. Select one or more notification channels (configured earlier) under "Send to".

Time-series databases aggregate data over time intervals. "No Data" can occur when no data was collected during the evaluated interval, for example when Grafana's clock is 5 minutes ahead of the system clock and the rule aggregates only the last minute. Data collection failures can also cause "No Data". This scenario is handled by the "Alerting" function.

As shown:

The red threshold line in the "Used Disk Space" alert indicates the trigger level. The red shaded area marks values above this threshold and signals danger. The default threshold is 85%. You can customize the "IS ABOVE" value as needed.
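To get a feel for how close a host is to the threshold before changing it, the comparison itself can be reproduced on the command line. The following is a minimal sketch of a strict "greater than" check. It mirrors only the comparison, not how Grafana aggregates values over the evaluation window, and the function name `is_above` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed (exit 0) when VALUE is strictly above THRESHOLD.
# awk is used so that fractional values such as 84.9 compare correctly.
# Usage: is_above VALUE THRESHOLD
is_above() {
    awk -v v="$1" -v t="$2" 'BEGIN { exit !(v + 0 > t + 0) }'
}

# Example: compare the root filesystem usage with the default 85% threshold.
#   used=$(df -P / | awk 'NR == 2 { sub(/%/, "", $5); print $5 }')
#   is_above "$used" 85 && echo "usage is above the alert threshold"
```

A value exactly equal to the threshold does not count as above it in this sketch.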

Disk I/O read and write alerts (Disk IO Reading, Disk IO Writing) are disabled by default, allowing you to set custom thresholds.

Note!

Click "Save" after changes to apply alert settings.

After modifying the alert.yaml configuration file, run the following commands to apply changes:

source /opt/ymatrix/matrixdb5/greenplum_path.sh

supervisorctl stop mxui

supervisorctl start mxui