Quick onboard

Deployment

Data Modeling

Connecting

Migration

Query

Operations and Maintenance

Common Maintenance

Partition

Backup and Restore

Expansion

Mirroring

Resource Management

Security

Monitoring

Performance Tuning

Troubleshooting

Reference Guide

Tool guide

Data type

Storage Engine

Executor

Stream

DR (Disaster Recovery)

Configuration

Index

Extension

SQL Reference

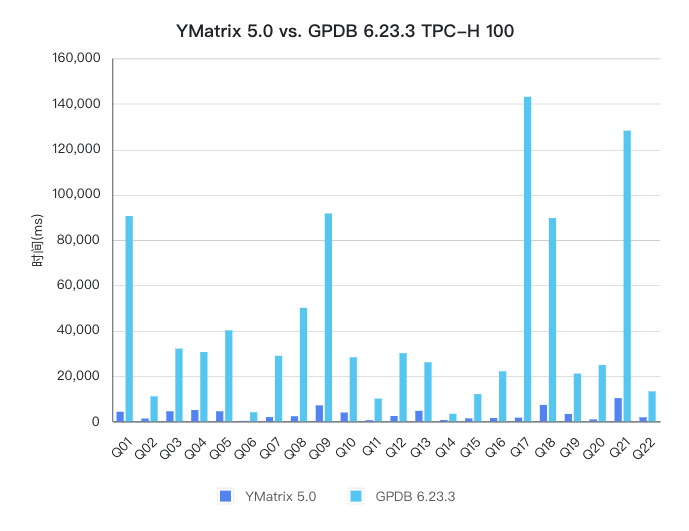

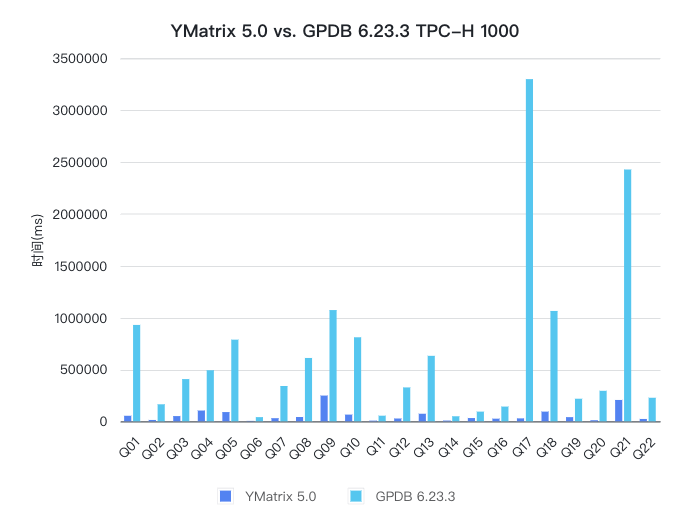

This performance report compares the performance performance of YMatrix and Greenplum (GPDB for short) in TPC-H analytical query scenarios. Test results show that YMatrix performed far better than GPDB in two data scales: 100 and 1000, with an increase of 13 times and 12 times respectively.

TPC-H is a decision support benchmark that contains a set of business-oriented ad hoc queries and concurrent data modifications. The data in the selected queries and databases have broad industry applicability. This benchmark demonstrates the ability of a decision support system to check large amounts of data, execute highly complex queries, and answer key business questions, while reflecting the multifaceted ability of the database system to process queries.

| Data Scale | Machine | vCPU | RAM | Bandwidth | EBS |

|---|---|---|---|---|---|

| 100 | AWS EC2, m5.8xlarge | 32 | 128GB | 10Gbps | gp3, iops = 3000, thoughtput = 125MB/s |

| 1000 | AWS EC2, m5.8xlarge | 32 | 128GB | 10Gbps | io1, iops = 64000 |

YMatrix's TPC-H benchmark test adopts stand-alone deployment and adopts the default deployment method that is automatically selected by YMatrix products. In the hardware environment introduced above, there are 6 Segment nodes.

Operating system kernel: 3.10.0-1160.66.1.el7.x86_64

Operating system version: CentOS Linux release 7.9.2009

YMatrix: Enterprise version of matrixdb5-5.0.0+enterprise_5.0.0, where YMatrix cluster deployment is available in YMatrix official website document and YMatrix's TPC-H Benchmark Tool.

Greenplum: open-source-greenplum-db-6.23.3-rhel7-x86_64

YMatrix and GPDB were tested at 100 times and 1000 times data scales respectively. YMatrix and GPDB were both configured as 6 segments, and all test results were measured by YMatrix engineers using the same machine configuration.

There are differences between 100 times and 1000 times data scale tests as follows:

compresslevel=1; the open source version of GPDB does not support quicklz compression format, so it only uses zstd compression, compresslevel=1.compresslevel=1.statement_mem=1GB is under 100 times the data scale, and statement_mem=2GB is under 1000 times the data scale.TPC-H 100 times the size data:

| table | Records | YMatrix(lz4) | YMatrix(zstd) | GPDB(zstd) |

|---|---|---|---|---|

| nation | 25 | 5 MB | 5 MB | 1 MB |

| region | 5 | 4 MB | 4 MB | 1 MB |

| part | 20,000,000 | 1 GB | 690 MB | 592 MB |

| partsupp | 80,000,000 | 4 GB | 3 GB | 3 GB |

| supplier | 1,000,000 | 97 MB | 70 MB | 55 MB |

| customer | 15,000,000 | 1 GB | 969 MB | 861 MB |

| orders | 150,000,000 | 7 GB | 5 GB | 4 GB |

| lineitem | 600,037,902 | 34 GB | 19 GB | 18 GB |

TPC-H 1000 times the size data:

| table | Records | YMatrix(zstd) | GPDB(zstd) |

|---|---|---|---|

| nation | 25 | 5 MB | 1 MB |

| region | 5 | 4 MB | 1 MB |

| part | 200,000,000 | 5 GB | 5 GB |

| partsupp | 800,000,000 | 29 GB | 31 GB |

| supplier | 10,000,000 | 616 MB | 538 MB |

| customer | 150,000,000 | 8 GB | 8 GB |

| orders | 1,500,000,000 | 46 GB | 46 GB |

| lineitem | 5,999,989,709 | 185 GB | 184 GB |

Prepare for the test environment

gp_vmem_protect_limit and statement_mem of the database reasonably according to the server hardware environment.Download the TPC-H benchmark tool

git clone https://github.com/ymatrix-data/TPC-H.gitNotes!

YMatrix's TPC-H tool is open source and easy to use, and you are welcome to try it out.

Execute the tpch.sh script

export PGPORT=5432

export PGDATABASE=tpch_s100tpch.sh script to generate the tpch_variable.sh configuration file. The -d parameter can select the database type, such as matrixdb, greenplum, postgresql; the -s parameter can specify the data size. ./tpch.sh -d matrixdb -s 100tpch.sh with one click. The script will automatically generate data, create tables, load data, and execute all TPC-H queries, and generate the query execution time. ./tpch.shNotes!

When thetpch.shscript executes data loading, YMatrix uses the loading method based on the MatrixGate tool, and the loading method based on the gpfdist tool for GPDB.

You can implement your specific needs by customizing the tpch_variable.sh configuration file:

RUN_GEN_DATA="true" to indicate the data generated.RUN_DDL="true", which means to create tables and indexes.RUN_LOAD="true" to indicate loading data.RUN_SQL="true" to execute all TPC-H queries.PREHEATING_DATA="true", indicating that the data file needs to be cached in the warm-up cycle.SINGLE_USER_ITERATIONS="2". If the above configuration item PREHEATING_DATA="true", it means that the TPC-H query will be executed 3 times, and there is a preheating result once, excluding the preheating result, and the minimum value of the last two results will be taken.Example:

If you repeat the TPC-H query, you only need to modify the following configuration items of the tpch_variable.sh configuration file:

`RUN_COMPILE_TPCH="false"`

`RUN_GEN_DATA="false"`

`RUN_INIT="false"`

`RUN_LOAD="false"`

`RUN_SQL="true"`

`RUN_SINGLE_USER_REPORT="true"`Then execute tpch.sh again.

YMatrix and GPDB were tested in multiple groups respectively, and the performance comparison was performed using their best results.

The performance data of YMatrix's TPC-H 100 adopts lz4 compression format, and the performance data of GPDB's TPC-H 100 adopts zstd compression, compresslevel=1. Because open source GPDB does not support quicklz compression format, zstd compression can only be used for comparison at present;

The TPC-H 1000 performance data of YMatrix and GPDB are both zstd compression, compresslevel=1.

In terms of performance comparison, YMatrix's performance is far better than GPDB, and both TPC-H 100 and 1000 are more than 10 times faster than GPDB.

| Data Scale | GPDB (ms) | YMatrix (ms) | Increase ratio |

|---|---|---|---|

| 100 | 930,071 | 70,044 | 1300% |

| 1000 | 15,273,254 | 1,265,478 | 1200% |

Below are the detailed test results. YMatrix TPC-H 100 was tested in two compression formats: lz4 and zstd, respectively. Among them, lz4 has better performance because the server memory used in this round of tests is large enough to cache all data files, so the compression rate is lower, but the lz4 compression format with better decompression performance can achieve better performance.

GPDB was tested in the scenarios where orca is turned on and off, and found that the performance of orca will be worse in the scenarios where orca will be TPC-H 1000, and the performance of orca will be better in the scenarios where orca will be better. In addition, YMatrix's tests are all conducted in the scenario of orca being turned off.

| (ms) | YMatrix TPC-H 100 planner lz4 | YMatrix TPC-H 100 planner zstd | GPDB TPC-H 100 orca zstd | GPDB TPC-H 1000 planner zstd | YMatrix TPC-H 1000 planner zstd | GPDB TPC-H 1000 orca zstd | GPDB TPC-H 1000 planner zstd | ||

|---|---|---|---|---|---|---|---|---|---|

| Q01 | 4,200 | 4,846 | 94,271 | 90,473 | 53,291 | 929,166 | 907,474 | ||

| Q02 | 1,228 | 1,417 | 13,134 | 11,005 | 12,960 | 163,967 | 132,898 | ||

| Q03 | 4,409 | 4,860 | 31,654 | 32,057 | 50,194 | 406,933 | 456,339 | ||

| Q04 | 4,965 | 4,947 | 40,743 | 30,522 | 103,699 | 492,440 | 429,417 | ||

| Q05 | 4,405 | 5,226 | 43,100 | 40,094 | 88,930 | 787,161 | 569,668 | ||

| Q06 | 183 | 254 | 4,066 | 3,995 | 2,852 | 40,141 | 38,985 | ||

| Q07 | 1,865 | 2,219 | 29,294 | 28,879 | 29,921 | 340,402 | 402,481 | ||

| Q08 | 2,239 | 3,123 | 51,852 | 49,998 | 41,305 | 610,720 | 650,542 | ||

| Q09 | 7,012 | 8,229 | 84,506 | 91,597 | 248,033 | 1,072,529 | 1,719,890 | ||

| Q10 | 3,861 | 4,469 | 61,953 | 28,238 | 64,568 | 810,094 | 395,927 | ||

| Q11 | 470 | 569 | 5,937 | 10,010 | 6,475 | 54,006 | 97,012 | ||

| Q12 | 2,319 | 2,486 | 27,271 | 30,032 | 26,964 | 326,579 | 335,811 | ||

| Q13 | 4,610 | 4,458 | 34,345 | 26,018 | 72,861 | 631,285 | 651,340 | ||

| Q14 | 588 | 696 | 5,591 | 3,318 | 7,277 | 48,476 | 47,320 | ||

| Q15 | 1,310 | 1,249 | 9,579 | 12,001 | 31,236 | 93,387 | 172,448 | ||

| Q16 | 1,471 | 1,584 | 8,493 | 22,038 | 25,295 | 141,958 | 492,614 | ||

| Q17 | 1,613 | 1,960 | 154,488 | 143,057 | 28,158 | 3,299,179 | 3,272,970 | ||

| Q18 | 7,225 | 6,950 | 78,451 | 89,587 | 93,391 | 1,064,011 | 1,276,977 | ||

| Q19 | 3,225 | 4,173 | 22,224 | 21,027 | 40,080 | 217,796 | 208,500 | ||

| Q20 | 850 | 1,004 | 24,920 | 24,818 | 9,596 | 293,892 | 421,818 | ||

| Q21 | 10,219 | 10,529 | 149,483 | 128,112 | 205,788 | 2,427,732 | 2,420,413 | ||

| Q22 | 1,777 | 1,858 | 19,866 | 13,196 | 22,603 | 226,963 | 172,399 | ||

| SUM | 70,044 | 77,107 | 995,221 | 930,071 | 1,265,478 | 14,478,829 | 15,273,254 |