| Operating System | Supported CPU Architecture |
|---|---|
| CentOS 8 | x86_64 |
| Red Hat 8 | x86_64 |

The server installation process includes 5 steps: viewing the basic server information, installation preparation, database RPM package installation, database deployment and post-installation settings.

Before installing, check the basic server information. Understanding your servers is always a good habit, because it helps you plan and deploy the cluster.

| Step | Command | Purpose |
|---|---|---|
| 1 | free -h | View memory information |
| 2 | df -h | View disk space |
| 3 | lscpu | View the number of CPUs and other CPU architecture details |
| 4 | cat /etc/system-release | View the operating system version |
| 5 | uname -a | Print all system information in the following order, omitting -p and -i if they are unknown: kernel name; network node host name; kernel release; kernel version; machine hardware name; processor type (non-portable); hardware platform (non-portable); operating system |
| 6 | tail -11 /proc/cpuinfo | View CPU information |
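The checks in the table can be run in one pass and saved per node, which makes it easier to compare the nodes before planning the cluster. The following is a minimal bash sketch; the function names run_check and collect_server_info are illustrative and not part of YMatrix, and a command that is missing on a host is reported instead of being treated as an error.

```shell
#!/usr/bin/env bash
# Run one check from the table above, printing a labelled header first.
# Commands that are not installed are reported rather than failing.
run_check() {
  local cmd="$1"
  echo "== $* =="
  if ! command -v "$cmd" >/dev/null 2>&1; then
    echo "(skipped: $cmd not found)"
    return 0
  fi
  # Merge stderr so error messages land in the same report.
  "$@" 2>&1 || echo "(exit status $?)"
}

# Run all six checks from the table in order.
collect_server_info() {
  run_check free -h
  run_check df -h
  run_check lscpu
  run_check cat /etc/system-release
  run_check uname -a
  run_check tail -11 /proc/cpuinfo
}
```

For example, run collect_server_info > server-info-mdw.txt on the master node, and the equivalent on sdw1 and sdw2, then compare the files.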

CentOS 8 and Red Hat 8 use the next-generation package manager, dnf, by default.

The EPEL (Extra Packages for Enterprise Linux) repository provides additional packages that are not included in the standard Red Hat and CentOS repositories. The PowerTools repository contains libraries and developer tools; it is available on RHEL/CentOS but is not enabled by default. Some EPEL packages depend on packages from PowerTools, so when the EPEL repository is enabled, PowerTools should be enabled as well.
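To check whether EPEL and PowerTools are enabled on a node, for example after running the commands that follow, the output of dnf repolist --enabled can be searched for both repository IDs. This is a minimal bash sketch: the function name check_repos_enabled is hypothetical, and it assumes the repository IDs are epel and powertools, as used in the commands below. Some earlier CentOS 8 releases spelled the latter PowerTools, so the match ignores case.

```shell
#!/usr/bin/env bash
# Read `dnf repolist --enabled` output on stdin and report whether the
# epel and powertools repository IDs (first column) are present.
# Returns non-zero if either repository is missing.
check_repos_enabled() {
  local ids repo status=0
  ids=$(awk '{print tolower($1)}')
  for repo in epel powertools; do
    if printf '%s\n' "$ids" | grep -qx "$repo"; then
      echo "enabled: $repo"
    else
      echo "missing: $repo"
      status=1
    fi
  done
  return "$status"
}
```

Usage on a node: dnf repolist --enabled | check_repos_enabled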

Perform the following steps as the root user or with root privileges:

```shell
# dnf -y install epel-release && \
dnf -y install 'dnf-command(config-manager)' && \
dnf config-manager --set-enabled powertools && \
dnf config-manager --set-enabled epel && \
dnf -y update
```

Install the necessary dependencies as the root user or with root privileges:

```shell
# dnf install -y libicu python3-devel python3-pip openssl-devel openssh-server net-tools
```

Copy the downloaded RPM package from your local machine to all nodes:

```shell
~ scp <local path> <username>@<Server IP address>:<server path>
```

Turn off the firewall:

```shell
# systemctl stop firewalld.service
# systemctl disable firewalld.service
```

Disable SELinux by setting the value of SELINUX to disabled in /etc/selinux/config:

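```shell
# sed -i 's/^SELINUX=.*$/SELINUX=disabled/' /etc/selinux/config
# setenforce 0
```

The change to /etc/selinux/config takes effect at the next boot; setenforce 0 switches the running system to permissive mode in the meantime.

Stop the sssd service: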

```shell
# systemctl stop sssd
# systemctl stop sssd-kcm.socket
```

Make sure that every node has a persistent host name. If a node does not, set one with the following command. For example, on the master node:

```shell
# hostnamectl set-hostname mdw
```

Set the corresponding host names on the two child nodes, sdw1 on the first and sdw2 on the second:

```shell
# hostnamectl set-hostname sdw1
# hostnamectl set-hostname sdw2
```

Ensure that all nodes in the cluster can reach each other by host name and by IP address. Add records to /etc/hosts that map each host name to the address of that node's network interface. For example, /etc/hosts on each of the three nodes contains entries like these:

```
192.168.100.10 mdw
192.168.100.11 sdw1
192.168.100.12 sdw2
```

Install the YMatrix RPM package as the root user or with root privileges:

```shell
# dnf install -y matrixdb5_5.0.0+enterprise-1.el8.x86_64.rpm
```

Notes!

During the actual installation, replace the file name with the name of the latest RPM package you downloaded.
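If several package versions have been downloaded into one directory, the newest one can be selected with version-aware sorting instead of typing the file name by hand. A minimal bash sketch follows; it assumes the packages keep the matrixdb5_ prefix and .rpm suffix of the example file name above, relies on GNU sort -V, and the function name latest_matrixdb_rpm is hypothetical.

```shell
#!/usr/bin/env bash
# Print the path of the highest-versioned matrixdb5_*.rpm in a directory
# (default: the current directory). Returns 1 if no package is found.
latest_matrixdb_rpm() {
  local dir newest f
  dir="${1:-.}"
  newest=$(
    for f in "$dir"/matrixdb5_*.rpm; do
      # An unmatched glob stays literal, so skip names that do not exist.
      if [ -e "$f" ]; then printf '%s\n' "$f"; fi
    done | sort -V | tail -n 1
  )
  if [ -z "$newest" ]; then
    echo "no matrixdb5_*.rpm package found in $dir" >&2
    return 1
  fi
  printf '%s\n' "$newest"
}
```

For example, in the directory that holds the downloaded packages: dnf install -y "$(latest_matrixdb_rpm .)". The returned path starts with ./, so dnf treats it as a local file.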

After a successful installation, the supervisor and MXUI processes start automatically. These background processes provide the graphical interface and process management services.

If you need to configure ports, edit the /etc/matrixdb5/defaults.conf file after installing the RPM package. Do this only on the Master.

```shell
# vim /etc/matrixdb5/defaults.conf
```

This guide uses the graphical deployment provided by YMatrix. Remote graphical deployment requires ports 8240 and 4617 on the servers to be accessible. After installation, these ports are open on all nodes by default. The graphical UI is served by the MXUI process.
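One way to confirm that ports 8240 and 4617 are reachable, from a Linux machine on the same network as the browser, is a plain TCP connection attempt. The bash sketch below uses bash's /dev/tcp redirection and the coreutils timeout command; the function name check_ports and the CHECK_PORTS override variable are hypothetical.

```shell
#!/usr/bin/env bash
# Try a TCP connection to each port on each host given as an argument.
# Ports default to 8240 and 4617 (graphical deployment); set CHECK_PORTS
# to a space-separated list to test other ports.
check_ports() {
  local host port status=0
  for host in "$@"; do
    for port in ${CHECK_PORTS:-8240 4617}; do
      # bash treats /dev/tcp/<host>/<port> as a TCP connection;
      # timeout abandons the attempt after 3 seconds.
      if timeout 3 bash -c 'exec 3<>"/dev/tcp/$1/$2"' _ "$host" "$port" 2>/dev/null; then
        echo "$host:$port reachable"
      else
        echo "$host:$port unreachable"
        status=1
      fi
    done
  done
  return "$status"
}
```

For example: check_ports mdw sdw1 sdw2. Any "unreachable" line points to a port that is blocked or not yet listening.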

Notes!

If you cannot deploy YMatrix using the graphical interface, refer to Command Line Deployment.

Open the graphical installation wizard in a browser at the following URL, where the IP is that of the mdw server:

```
http://<IP>:8240/
```

On the first page of the wizard, enter the superuser password. You can view it with the sudo more /etc/matrixdb5/auth.conf command.

Select "Multi-node deployment" on the second page and click Next.

Next, complete the four steps of multi-node deployment.

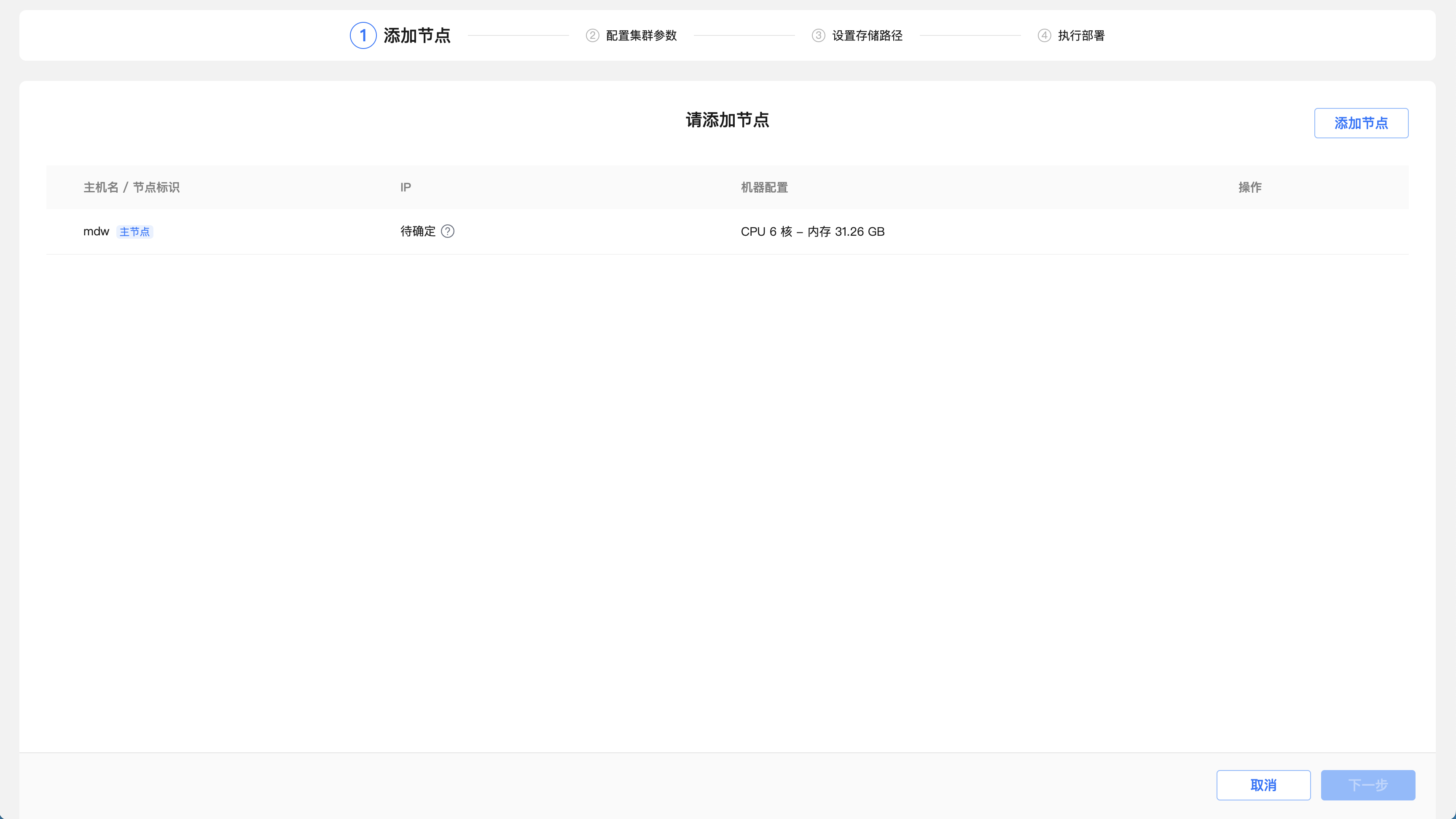

Step 1: Add nodes. Click the "Add Node" button.

Enter the IP addresses, host names, or FQDNs of sdw1 and sdw2 in the text box, click "OK", and then click "Next".

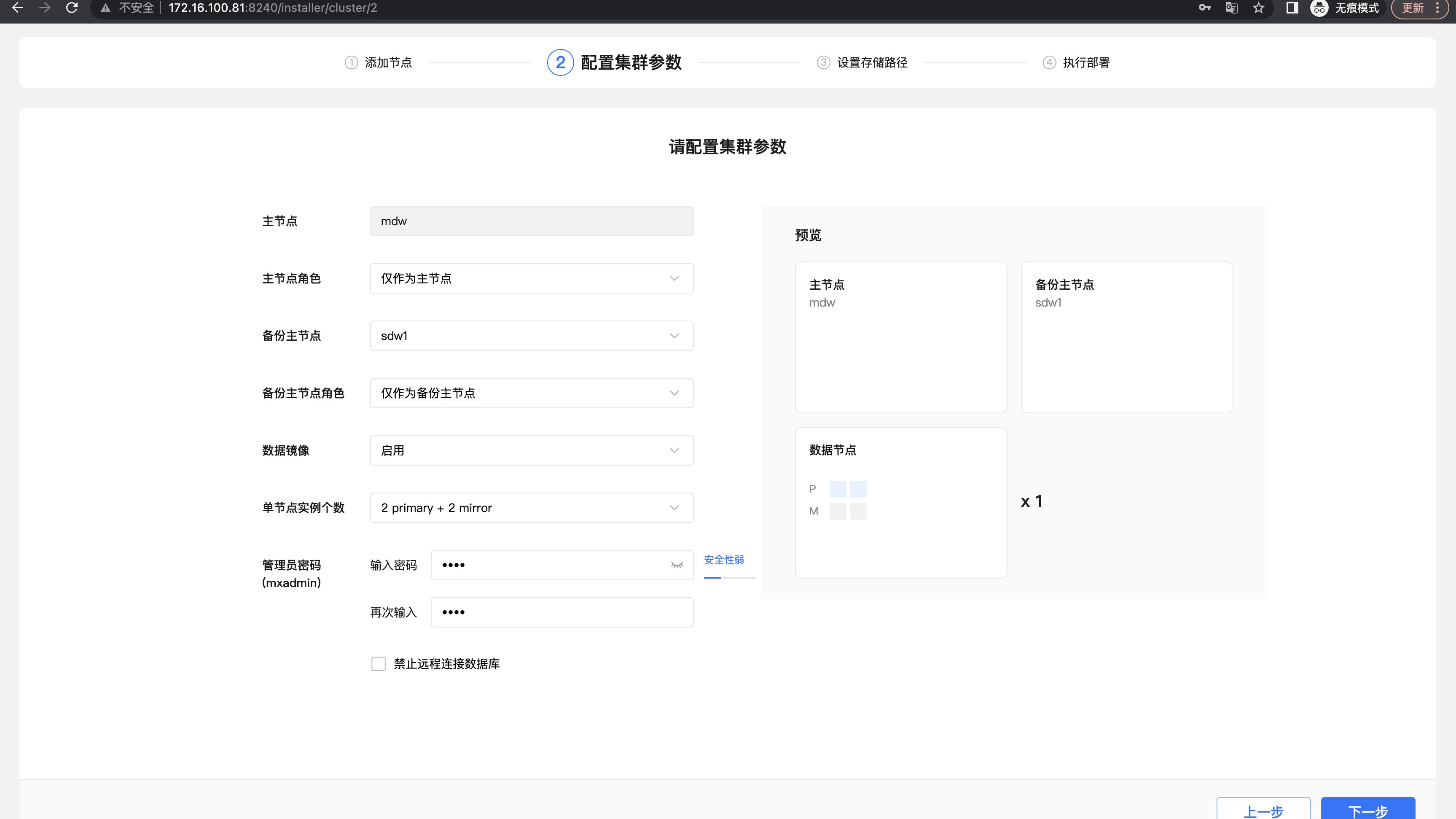

Step 2: Configure cluster parameters. "Data mirroring" determines whether the cluster's data nodes keep mirror copies of the data. Enabling it is recommended in production environments so that the cluster is highly available. The system automatically recommends the disks with the most space and a number of segments that matches the system resources; both can be adjusted for your specific use case. The resulting cluster layout is shown in a diagram. After confirming, click "Next".

Step 3: Set the storage path.

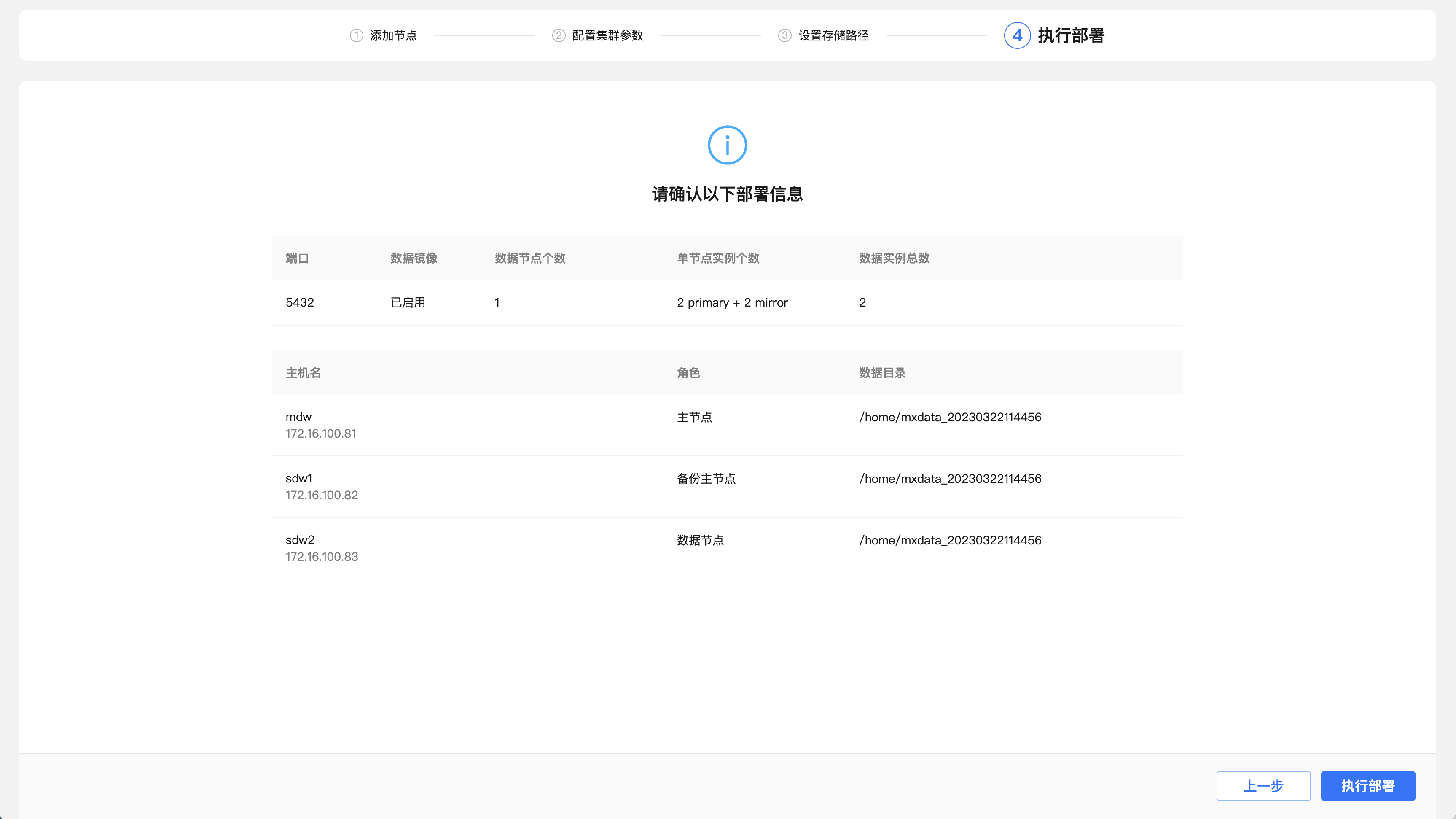

Step 4: Execute deployment. This step lists the configuration parameters from the previous steps. After confirming that they are correct, click "Execute deployment".

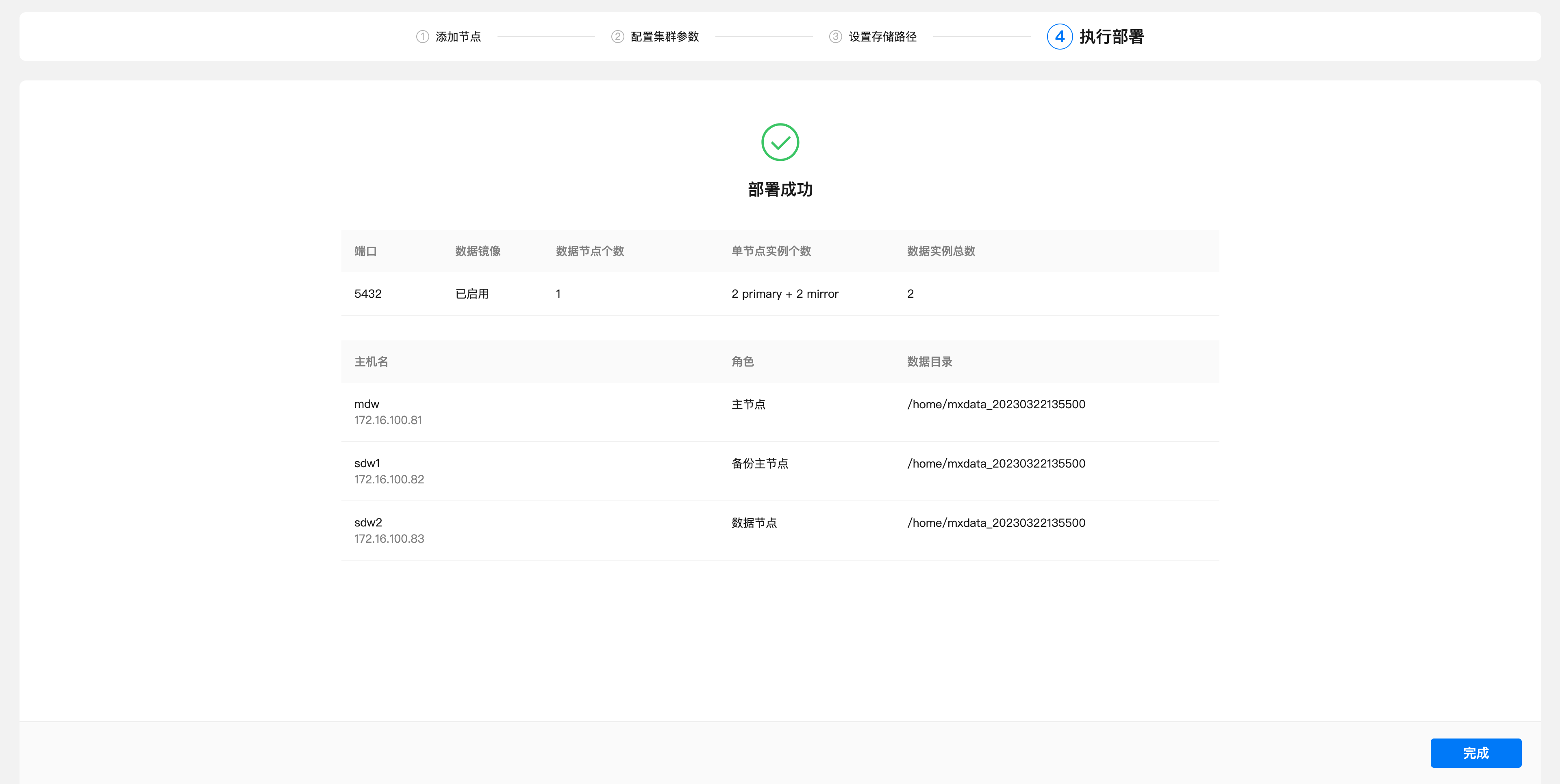

The system then deploys the cluster automatically, listing the detailed steps and their progress. When all steps have completed successfully, the deployment is finished.

Complete the deployment.

By default, a YMatrix installation allows remote connections. If "Allow remote connection to database" was not checked during installation, manually add a line like the following to the $MASTER_DATA_DIRECTORY/pg_hba.conf file. It allows all users from any IP address to connect to all databases using password (md5) authentication. To reduce security risks, restrict the IP range or database names to what you actually need:

```
host all all 0.0.0.0/0 md5
```

After making these changes, run the following command to reload the pg_hba.conf configuration file:

```shell
$ mxstop -u
```

Use the following commands to start, stop, restart, and check the status of YMatrix:

```shell
$ mxstart -a
$ mxstop -af
$ mxstop -arf
$ mxstate -s
```

| Command | Purpose |
|---|---|
| mxstart -a | Start the cluster |
| mxstop -a | Stop the cluster. In this mode, the shutdown hangs if any sessions are still connected |
| mxstop -af | Shut down the cluster quickly |
| mxstop -arf | Restart the cluster, waiting for currently executing SQL statements to finish. In this mode, the shutdown hangs if any sessions are still connected |
| mxstate -s | View cluster status |
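For scripts that manage the cluster, the start, stop, restart, and status commands listed above can be mapped to verbs in one place. This is a hypothetical bash wrapper: ymatrix_ctl and the MX_DRY_RUN variable are not part of YMatrix. It calls the commands exactly as listed, so run it in the same environment where you would run mxstart and mxstop directly.

```shell
#!/usr/bin/env bash
# Map a verb to the YMatrix command used for it in the code block above.
# With MX_DRY_RUN=1 the command is printed instead of executed.
ymatrix_ctl() {
  local cmd
  case "$1" in
    start)   cmd="mxstart -a" ;;
    stop)    cmd="mxstop -af" ;;
    restart) cmd="mxstop -arf" ;;
    status)  cmd="mxstate -s" ;;
    *)
      echo "usage: ymatrix_ctl start|stop|restart|status" >&2
      return 2
      ;;
  esac
  if [ "${MX_DRY_RUN:-0}" = 1 ]; then
    echo "$cmd"
  else
    # Left unquoted on purpose so the string splits into command and options.
    $cmd
  fi
}
```

For example, MX_DRY_RUN=1 ymatrix_ctl restart prints the restart command without running it.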