This section uses a real scenario to tell you how to create a timing table in MatrixDB: device table and metric table.

Please refer to [MatrixDB Data Modeling and Spatial Distribution Model] for the teaching video of this course (https://www.bilibili.com/video/BV133411r7WH?share_source=copy_web)

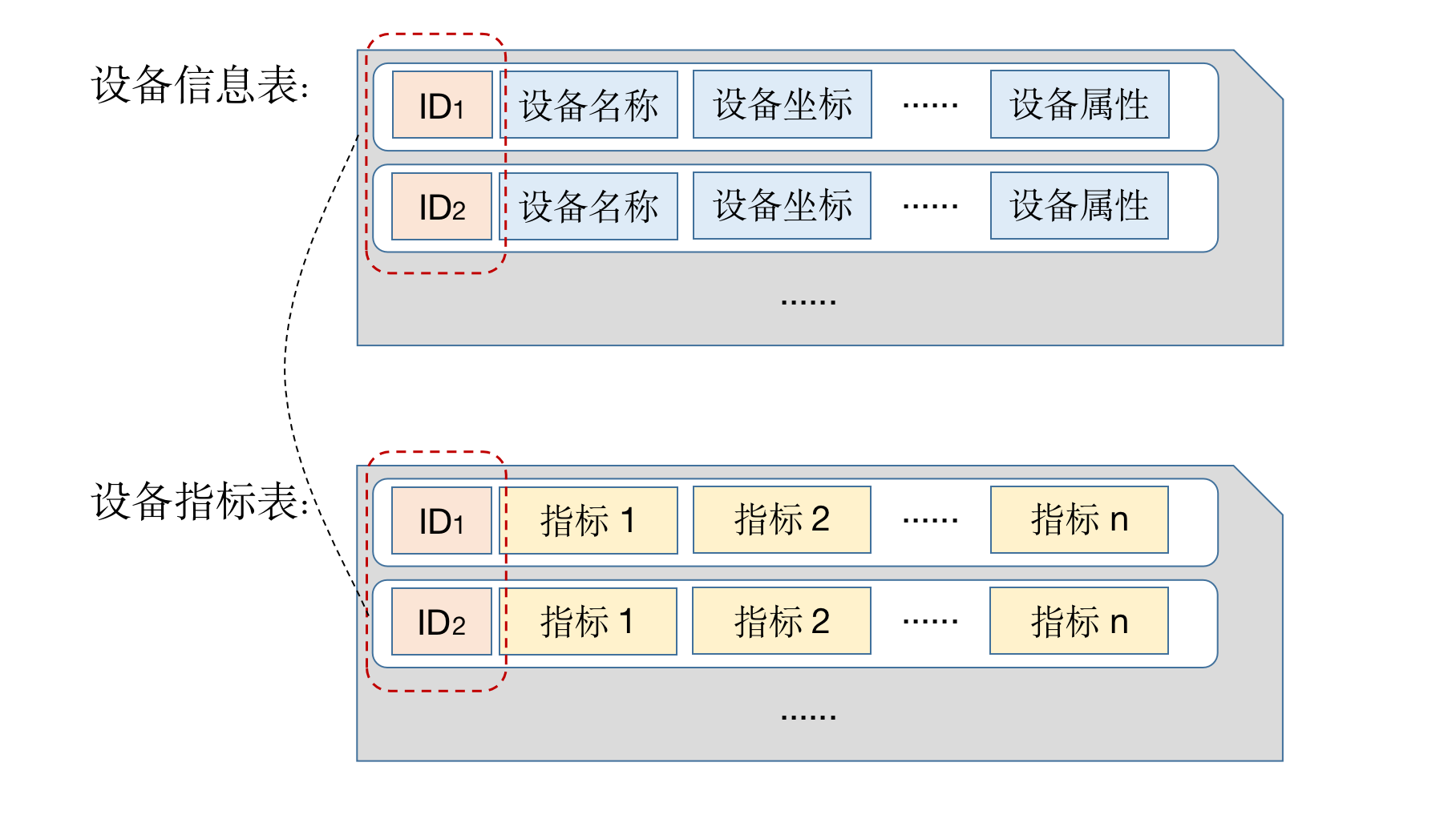

In the scene, the speed, temperature and humidity of the fan should be collected. Fan equipment includes attributes such as name, number, area, coordinates, etc.

| Field | Type | Description |

|---|---|---|

| device_id | int | device_id is the unique id of the fan, and use the auto-increment primary key (SERIAL type) |

| name | text | fan name |

| serial_number | text | fan serial number |

| region | text | Flower belongs to the area |

| longitude | float4 | fan longitude |

| latitude | float4 | fan latitude |

| Field | Type | Description |

|---|---|---|

| device_id | int | fan id |

| time | timestamp | timestamp of time series data |

| speed | float4 | fan speed |

| temperature | float4 | fan temperature |

| humidity | float4 | fan humidity |

Like other databases, in MatrixDB, data tables are organized by libraries, so first create the database stats.

You can connect to the default database postgres and then create it using CREATE DATABASE:

[mxadmin@mdw ~]$ psql postgres

psql (12)

Type "help" for help.

postgres=# CREATE DATABASE stats;

CREATE DATABASEYou can also directly create using MatrixDB's command line tool createb:

[mxadmin@mdw ~]$ createdb statsAfter creating the database, you have to connect it to use it. In MatrixDB, each connection can only belong to one fixed library.

You can connect to the database parameters by executing the psql command:

[mxadmin@mdw ~]$ psql stats

psql (12)

Type "help" for help.

stats=#You can also switch to the connection to the new database from other database connections using the \c meta command:

postgres=# \c stats

You are now connected to database "stats" as user "mxadmin".After connecting to the database, start creating data tables:

CREATE TABLE device(

device_id serial,

name text,

serial_number text,

region text,

longitude float4,

latitude float4

)

Distributed replicated;CREATE TABLE metrics(

time timestamp with time zone,

device_id int,

speed float4,

temperature float4,

humidity float4

)

Distributed by (device_id);From the above table creation statement, you will find that the distribution methods of the two tables are different.

device table is used as Distributed replicatedmetrics is used as Distributed by (device_id)The distribution method of tables is introduced later. The following is a unique index for the device table:

Because the device information in the device table must be unique, it is necessary to establish a unique index for the information identifying the unique attributes of the device to facilitate the application to maintain the device information. Here it is assumed that the device number is used to mark the uniqueness of the device, so a unique index is established as follows:

CREATE UNIQUE INDEX ON device(serial_number);After creating the table, the following will introduce simple data writing and query operations.

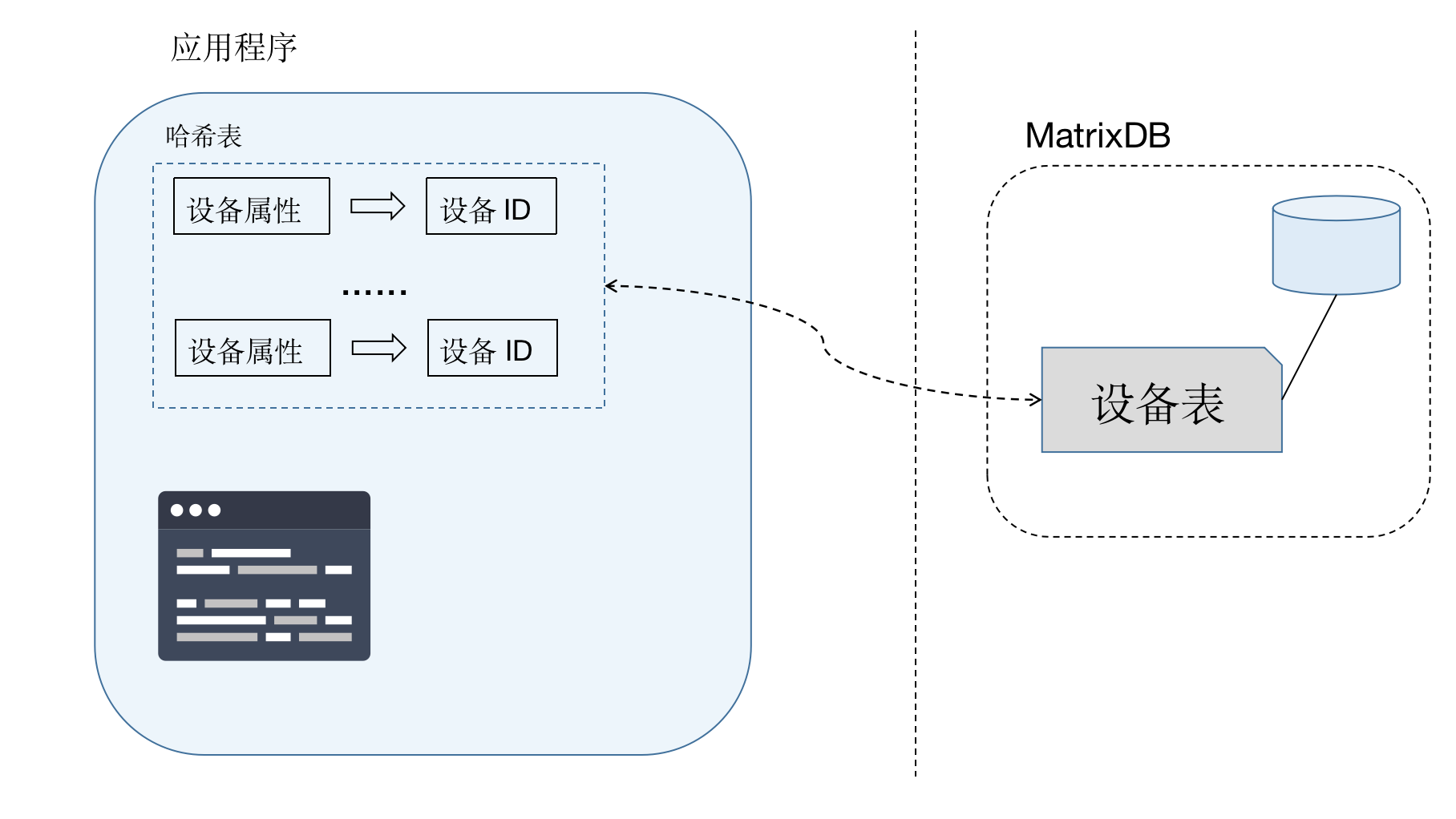

When the application party is accessing the indicator data, it needs to follow the following steps:

So how do you convert the device ID into an ID? The device ID is not an attribute of the device itself and will not be sent with the indicator data. It needs to be converted by the application party.

The easiest way is to check the library according to the device number every time, and read it directly if it exists; insert a new device and generate an ID if it does not exist.

This method requires that each metric be checked before inserting it, greatly increasing the database load and affecting throughput. Therefore, the recommended approach is to maintain a memory hash table by the Internet of Things gateway or Kafka consumer program. When each piece of data arrives, query the device ID from the memory hash table. If it exists, it will be used directly; if it does not exist, it will be inserted into the library to obtain the device ID and update the hash table.

Since the device information and indicator data are stored separately in two tables. When doing query, if you want to obtain both indicator statistics and device information, you need to make connections based on the device ID.

The following SQL statistics on the average temperature, average humidity and maximum speed of each fan on '2021-07-01':

SELECT device.name,

AVG(metrics.temperature),

AVG(metrics.humidity),

MAX(metrics.speed)

FROM device JOIN metrics USING (device_id)

WHERE metrics.time >= '2021-07-01'

AND metrics.time < '2021-07-02'

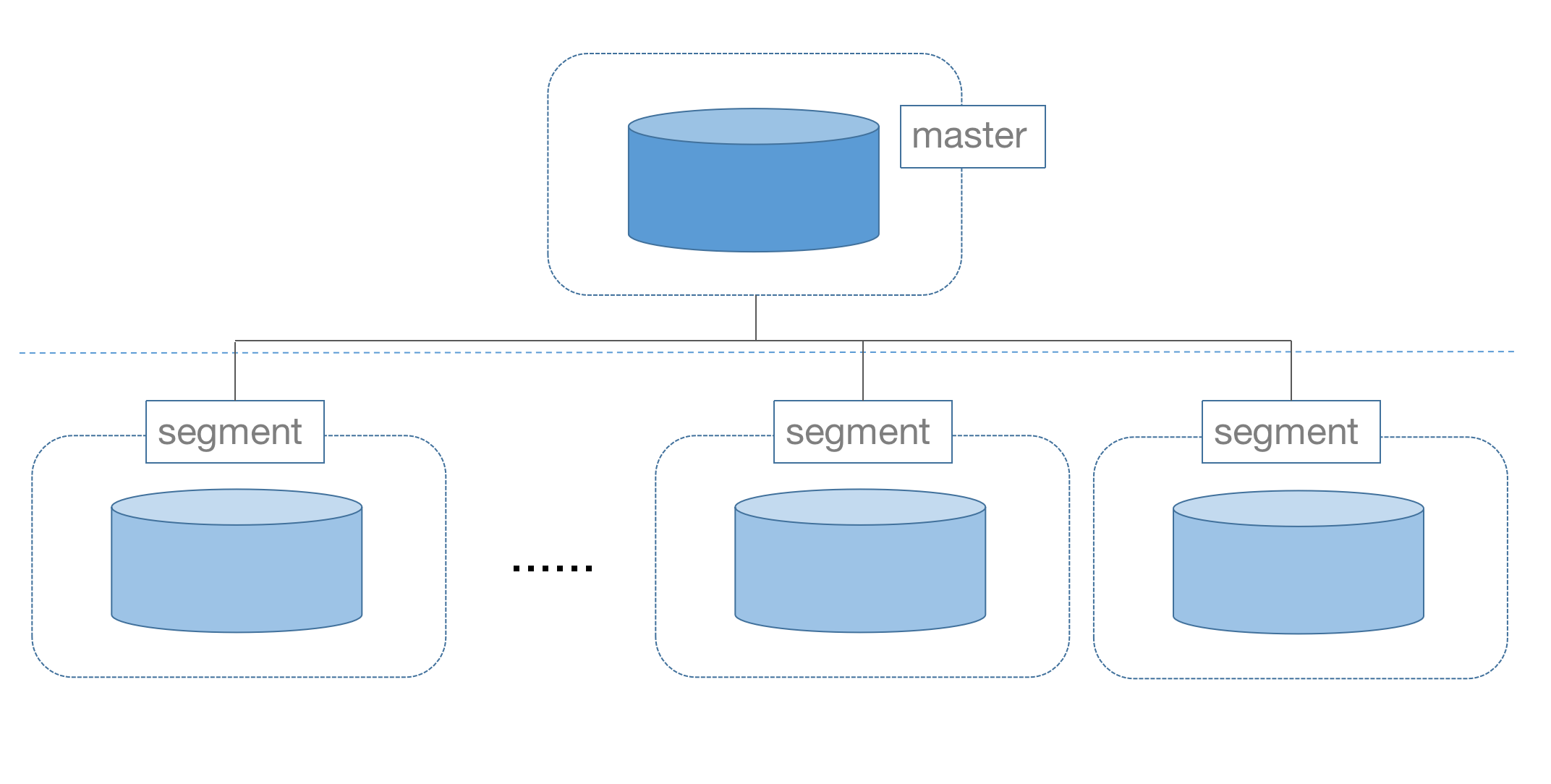

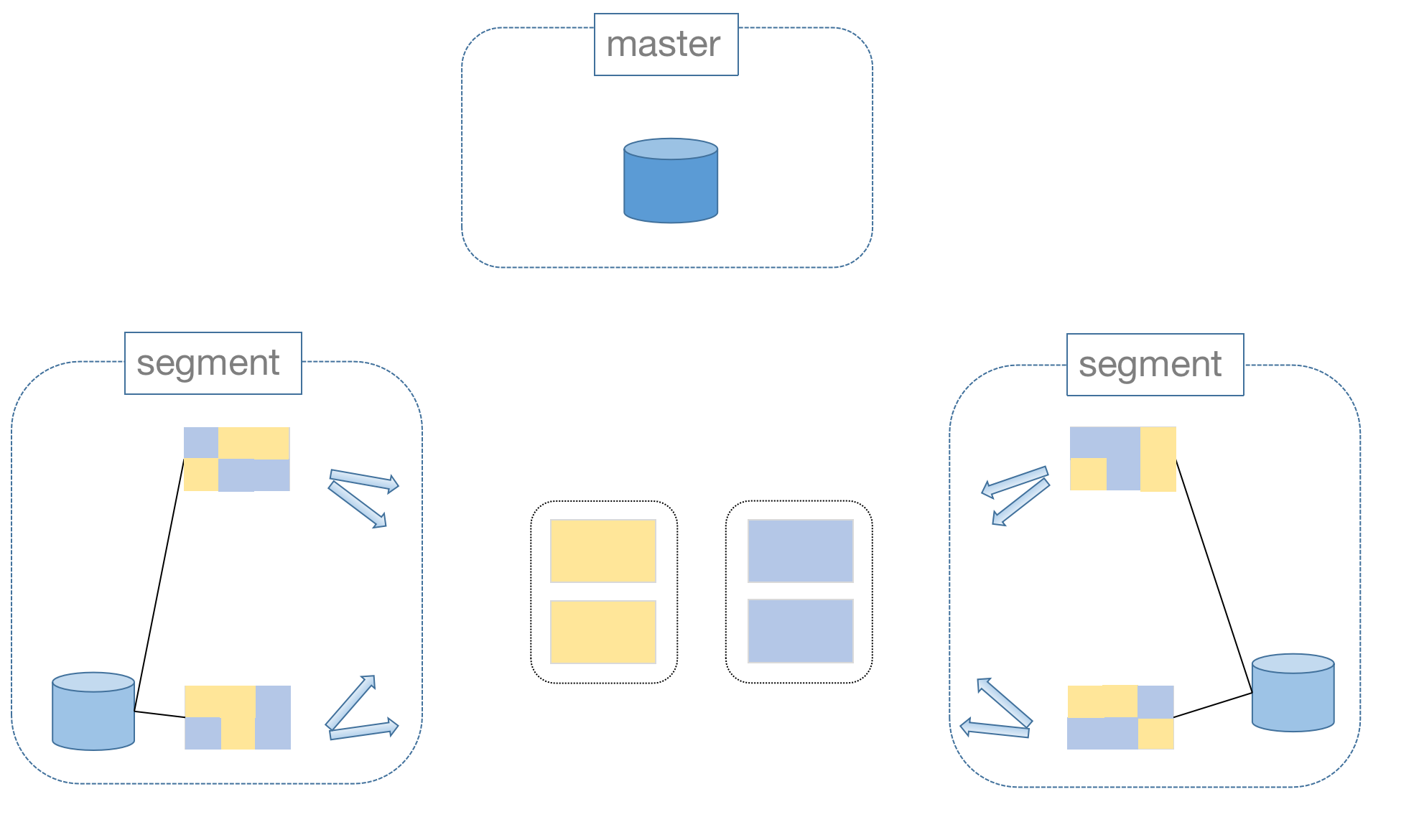

GROUP BY device.device_id, device.name;MatrixDB is a centralized distributed database, including two types of nodes: master and segment.

The master is a central control node that receives user requests and generates query plans. The master node does not store data, and the data is stored on the segment node.

The master is a central control node that receives user requests and generates query plans. The master node does not store data, and the data is stored on the segment node.

There is only one master node, and at least one segment node, which can be more, dozens or even hundreds. The more segment nodes, the more data the cluster can store and the stronger the computing power.

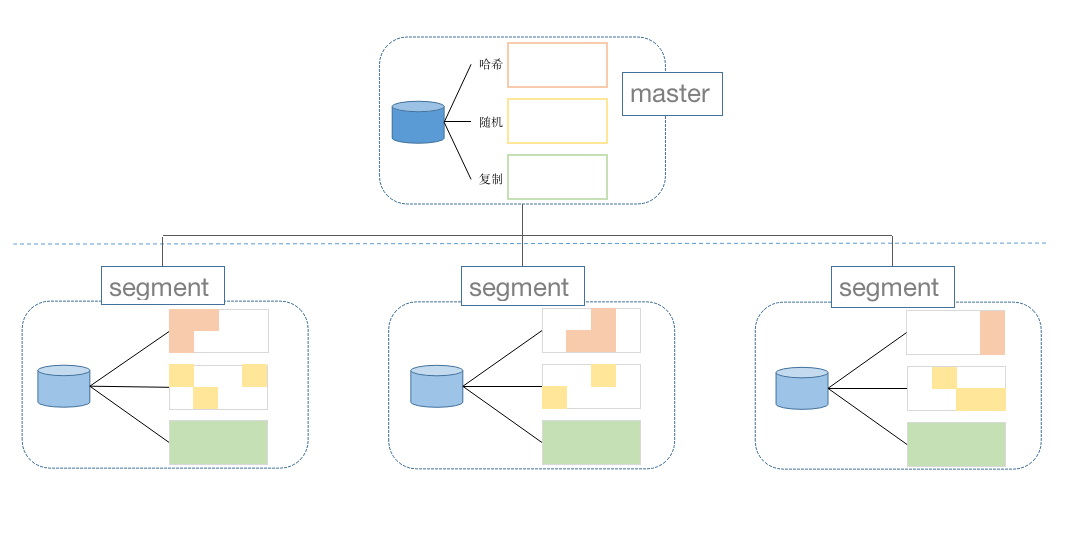

MatrixDB data table has two distribution strategies on segment, sharding and redundancy; sharding is divided into two types of hashing and random sharding:

The shard distribution is to split the data horizontally, and a piece of data is stored on only one of the nodes. Hash shards need to define hash keys, which can be one or more. The database calculates the key value for the hash key to determine which node to store on. Random sharding randomly allocates data to a node.

The redundant distribution stores data redundancy on each data node, that is, each data node contains all the data in the table. This method will occupy a lot of storage space, so only small tables that often require connection operations use redundant distribution.

In summary, there are 3 strategies for data distribution, and different distribution strategies. When creating tables, you can set them through the Distributed by keyword:

MatrixDB under a distributed architecture, most data tables are stored in shards, but can support all relationship queries. From the user's perspective, it is PostgreSQL with infinite space.

So, how to do data connections across nodes? This is thanks to MatrixDB's Motion operation, when the data that meets the connection conditions are not on the same node, they will be moved to the same node for connection.

Of course, there is a cost to do mobile operations. Therefore, when designing a table, we must fully consider the data distribution characteristics and the query types to be conducted later to comprehensively determine the distribution strategy.

Movement operations can only be avoided if the data that meets the connection conditions are completely distributed on the same node. Therefore, it is often necessary to do large tables for joining operations, and it is best to set the joining key to the distribution key.

After understanding the distribution method and pros and cons of MatrixDB data, let’s discuss which distribution strategy should be adopted in the timing data table.

First, let’s consider the indicator table. The amount of indicator data is very large, and it is impossible to adopt a redundant distribution method. There is no pattern in the random distribution, which is not conducive to the subsequent statistical analysis, so hash distribution is the first choice. Then how to determine the distribution bond?

The timing table data contains 3 dimensions:

The subsequent statistical analysis basically uses other attributes of the device and connected to the device table as grouping keys, so the device ID is used as the distribution key.

The scale of the device table data is relatively fixed and will not grow infinitely like the index data, so the redundant distribution method is generally adopted. In this way, there is no need to move data across nodes when connecting to the indicator table.