MatrixDB provides a native Grafana-based graphical monitoring tool. This document describes the steps to deploy and manage the monitoring components. MatrixDB includes a default monitoring dashboard that displays the following information. Users can also create custom dashboards in Grafana using the collected system data.

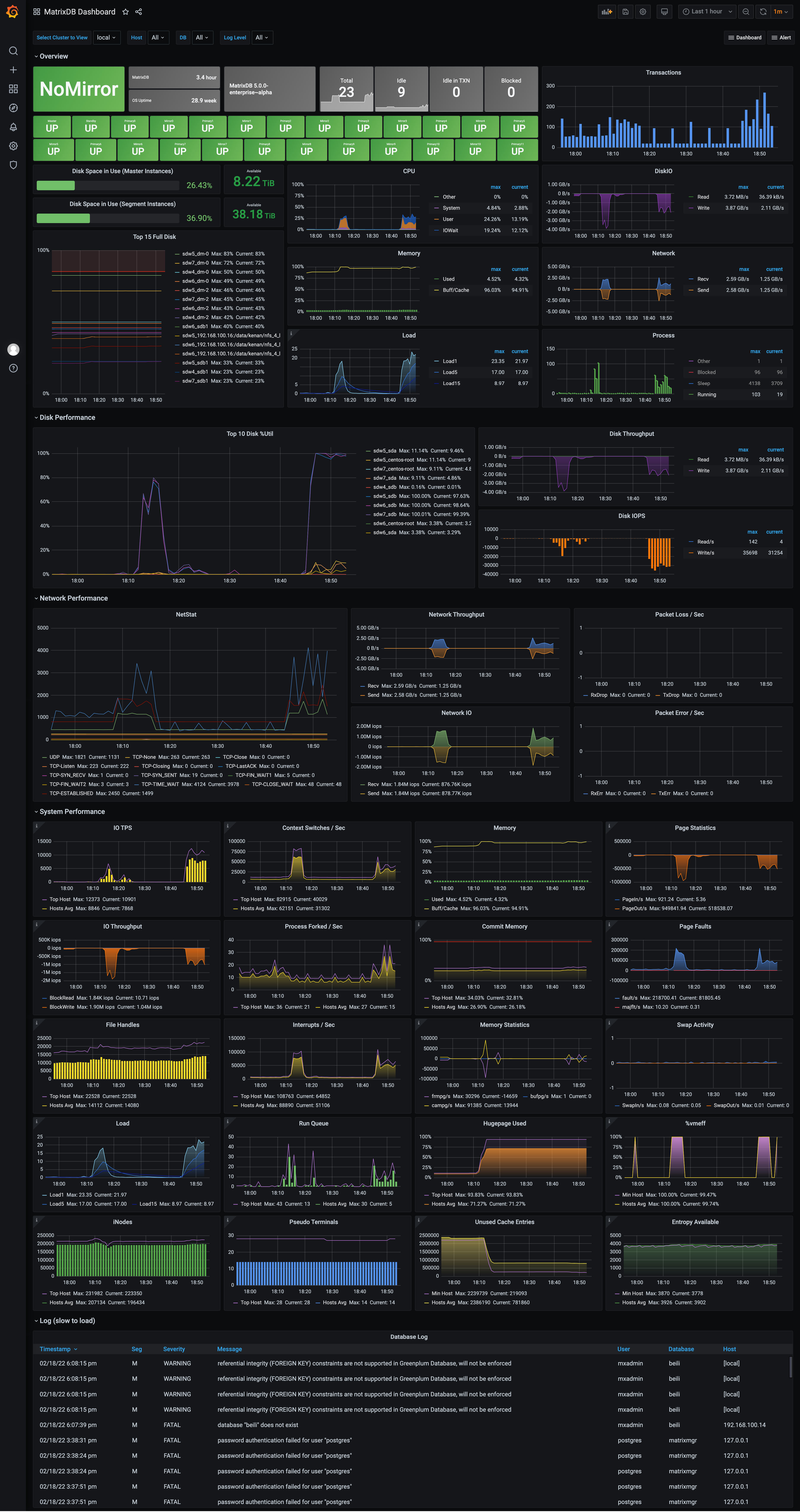

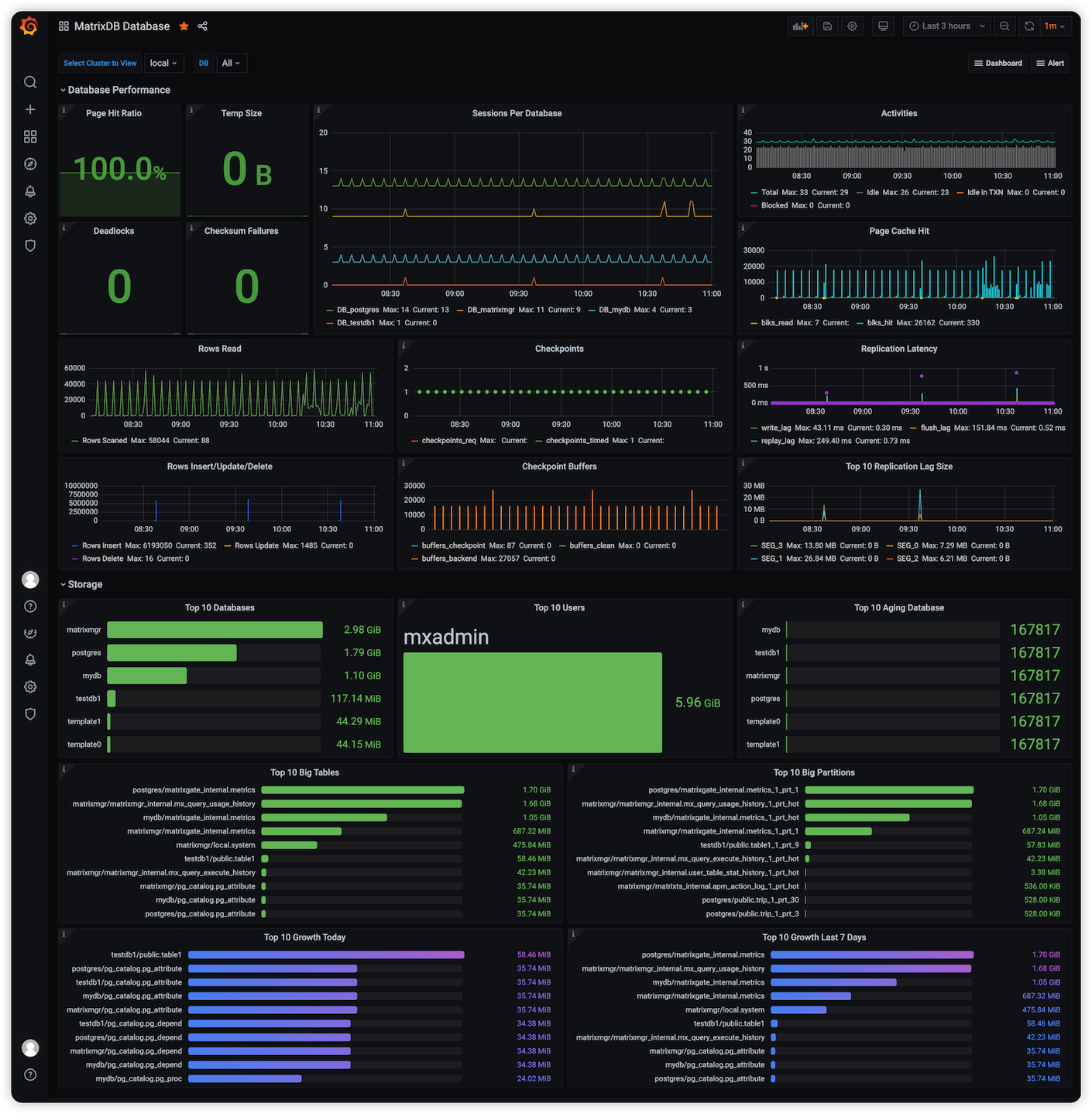

The following figures show the monitoring dashboards after deployment. Starting from version 4.4, monitoring is split into two separate dashboards: MatrixDB Dashboard and MatrixDB Database.

MatrixDB Dashboard:

MatrixDB Database:

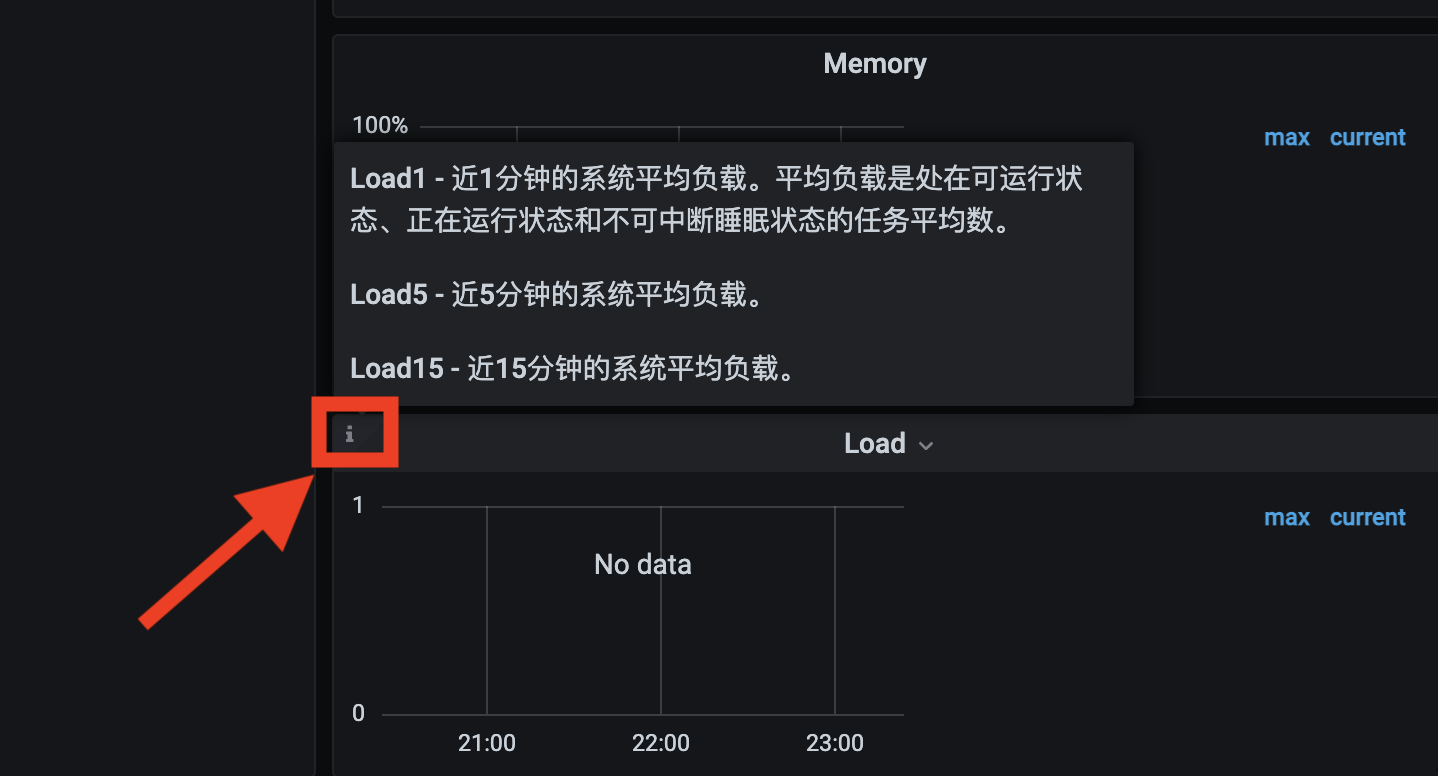

Descriptions have been added for panels that may be difficult to understand. Hover the mouse over the icon in the upper-left corner of a panel to view the description:

Monitoring components are included in the MatrixDB installation package. After deploying MatrixDB, complete the monitoring setup in two steps: enabling metric collection and installing and configuring Grafana.

Perform the following steps to enable metric collection for MatrixDB and system resource usage. Collected data will be stored in a newly created database named matrixmgr.

createdb matrixmgr

psql -d matrixmgr

matrixmgr=# CREATE EXTENSION matrixts;

matrixmgr=# CREATE EXTENSION matrixmgr;

matrixmgr=# SELECT mxmgr_init_local();

After successful execution, a new schema named local appears in the matrixmgr database. Tables and views in this schema contain cluster monitoring and configuration information. Do not modify the definitions or contents of these tables and views manually.
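One way to confirm that the local schema was created is to list its tables and views with a read-only query. The sketch below assumes psql can connect to matrixmgr as the current user. The helper name show_local_schema is illustrative and not a MatrixDB command. The query uses only the standard information_schema catalog.

```shell
# Sketch: list the tables and views in the "local" schema of matrixmgr.
# show_local_schema is an illustrative helper name, not part of MatrixDB.
# The query is read-only, so it does not touch the monitoring tables.
show_local_schema() {
  psql -d matrixmgr -c "SELECT table_name, table_type
                        FROM information_schema.tables
                        WHERE table_schema = 'local'
                        ORDER BY table_name;"
}
```

Running show_local_schema after mxmgr_init_local() should return at least one row. An empty result suggests that initialization did not complete.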

Prepare a host that can access both the MatrixDB Master node and the Internet. This can be the Master, Standby Master, or a separate machine (Linux, macOS, Windows, etc.).

Install Grafana. Version 7.3 or later is recommended. The official download page is https://grafana.com/grafana/download.

The commands below use CentOS 7 as an example. For other operating systems, refer to the corresponding documentation.

Note!

YMatrix supports offline installation of Grafana. See 6 Monitoring - FAQ 1 for details.

wget https://dl.grafana.com/oss/release/grafana-7.3.6-1.x86_64.rpm

sudo yum install grafana-7.3.6-1.x86_64.rpm

sudo systemctl daemon-reload

sudo systemctl start grafana-server

sudo systemctl status grafana-server

sudo systemctl enable grafana-server

Notes:

The Grafana version available through yum on CentOS 7 is often outdated (6.x), so sudo yum install grafana is not recommended.

After installation, open a browser and navigate to the following URL. Port 3000 is the default Grafana port and can be changed. Log in with the default credentials (admin/admin). For security, change the password after logging in by clicking the user icon in the lower-left corner.

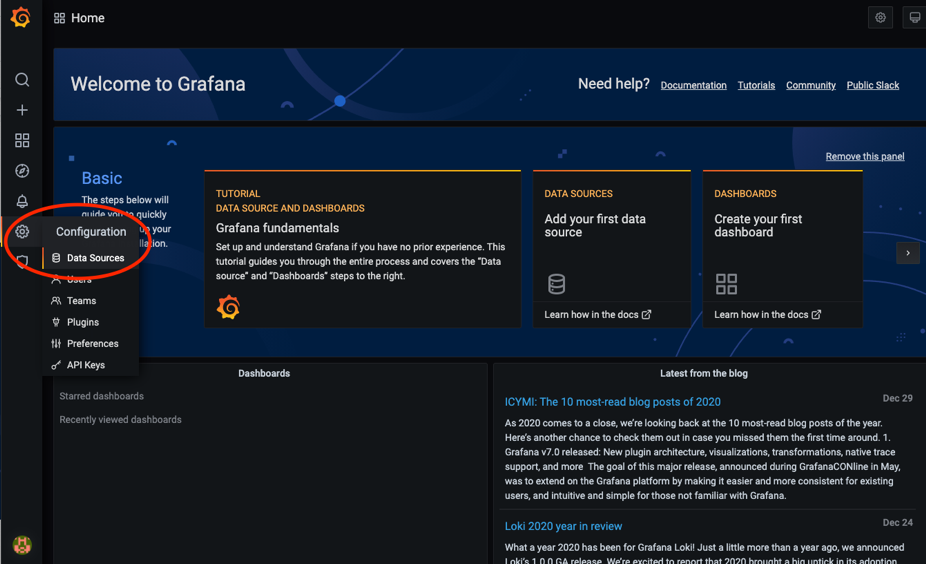

http://<IP_or_hostname_of_Grafana_host>:3000

After Grafana is installed, add the matrixmgr database in MatrixDB as a data source and import the predefined monitoring dashboards.

To add a data source:

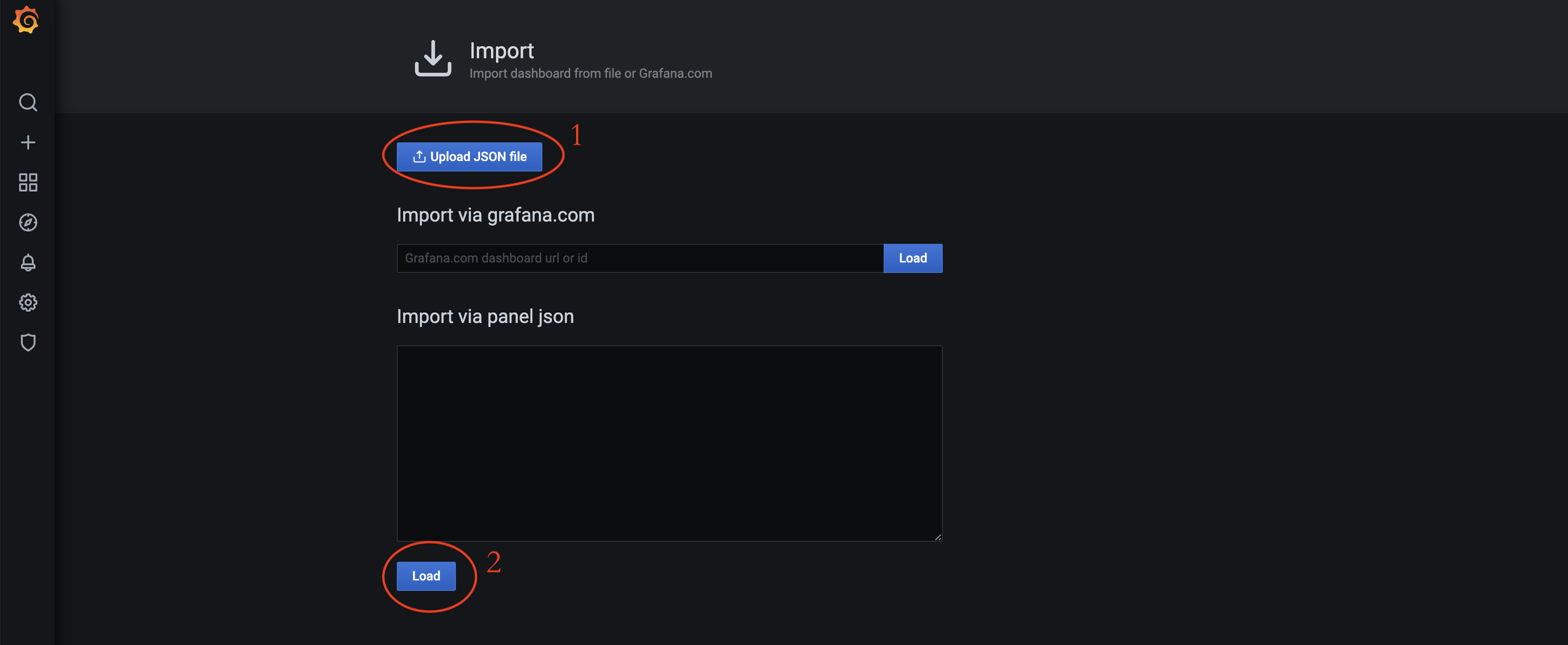

Before importing the predefined dashboards, copy the dashboard.json and database.json files to your local machine. The process is to locate the files on the server and then copy them to your local machine. Follow these steps:

First, log in to the server and switch to the mxadmin user. Locate the dashboard.json and database.json files in the path shown below. You can use the cd command, find command, or any other method you prefer. The example below uses find for database.json.

[mxadmin@mdw ~]$ cd /usr/local/matrixdb/share/doc/postgresql/extension

##or

[mxadmin@mdw ~]$ find /usr/local/matrixdb/share/doc/postgresql/extension -name database.json

Next, use the scp command to copy the files to your local machine. Permission issues may arise, so consider copying the file to a public directory such as /tmp/ first, then transferring it from /tmp/ to your local machine. The example below uses database.json.

Note!

When copying from /tmp/, ensure you switch users appropriately to avoid permission issues.

[mxadmin@mdw]$ scp mxadmin@<server_IP>:/usr/local/matrixdb/share/doc/postgresql/extension/"database.json" mxadmin@<server_IP>:/tmp/

~ scp waq@<server_IP>:/tmp/"database.json" /Users/akkepler/workplace/Grafana

Finally, verify that the files were copied successfully by opening the local directory or using command-line tools. After copying, edit the files as follows:

In dashboard.json, replace all instances of ${cluster} with local, and replace all instances of $host with the actual hostnames. For example, if your cluster consists of mdw, sdw1, and sdw2, replace every $host with 'mdw','sdw1','sdw2'.

In database.json, replace only ${cluster} with local.

After making these changes, import the JSON files through the Grafana web interface.
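The placeholder replacements described above can be done in any text editor. As an alternative, the following sketch applies them with sed. The function name patch_json, its arguments, and the output file names are illustrative and not part of MatrixDB or Grafana. It treats the file as plain text and assumes the placeholders appear literally as ${cluster} and $host, as stated above.

```shell
# Sketch only: apply the placeholder replacements to a copy of a dashboard
# JSON file. patch_json and its arguments are illustrative names.
#
# Usage: patch_json <input.json> <output.json> [host ...]
#   - every ${cluster} becomes local
#   - if hosts are given, every $host becomes 'h1','h2',...
# The function body runs in a subshell, so its variables stay private.
patch_json() (
  in_file=$1
  out_file=$2
  shift 2

  # Build the quoted, comma-separated list, e.g. 'mdw','sdw1','sdw2'.
  host_list=""
  for h in "$@"; do
    host_list="${host_list:+$host_list,}'$h'"
  done

  if [ -n "$host_list" ]; then
    # Assumes hostnames contain no "/" or "&", which are special to sed.
    sed -e 's/\${cluster}/local/g' \
        -e "s/\\\$host/${host_list}/g" "$in_file" > "$out_file"
  else
    sed -e 's/\${cluster}/local/g' "$in_file" > "$out_file"
  fi
)
```

For the three-host example, patch_json dashboard.json dashboard.local.json mdw sdw1 sdw2 writes a modified copy and leaves the original untouched. Calling patch_json database.json database.local.json with no hosts replaces only ${cluster}. Review the output before importing it, since a plain-text substitution cannot tell a placeholder from an identical string used elsewhere in the file.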

After enabling cluster metric collection, each host runs a collection service. Related logs are stored in the /var/log/matrixdb directory.
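When troubleshooting collection on a host, a quick first step is to see which log files changed most recently. The sketch below is a small helper for that. The name recent_logs is illustrative, and because this document does not specify the log file names, it simply lists whatever is in the directory, newest first.

```shell
# Sketch: list the most recently modified files in the collector log directory.
# recent_logs is an illustrative helper name, not a MatrixDB command.
#
# Usage: recent_logs [directory] [count]
#   directory defaults to /var/log/matrixdb, count defaults to 5.
# The function body runs in a subshell, so its variables stay private.
recent_logs() (
  dir=${1:-/var/log/matrixdb}
  count=${2:-5}
  # ls -t sorts by modification time, newest first.
  ls -t "$dir" | head -n "$count"
)
```

Calling recent_logs with no arguments on a MatrixDB host shows up to five entries from /var/log/matrixdb. The files can then be inspected with tail or less.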

If MatrixDB is restarted, or the host is rebooted and MatrixDB is restarted, the metric collection service starts automatically without manual intervention.

To stop the metric collection service, connect to the matrixmgr database and run the mxmgr_remove_all command. Collected data is preserved after the service stops:

psql -d matrixmgr

matrixmgr=# SELECT mxmgr_remove_all('local');

If the collection service is manually stopped or MatrixDB is reinstalled, re-enable metric collection by connecting to the matrixmgr database and running the mxmgr_deploy command:

matrixmgr=# SELECT mxmgr_deploy('local');