This document describes the cluster health monitoring feature of the graphical interface.

When supporting daily business, the YMatrix database runs a large number of SQL statements and may run into problems such as hardware or network failures and lock waits caused by concurrent transactions. If these problems are not handled in time, they can slow down responses or even cause errors, reducing the efficiency of business operations. To help you deal with such problems, the health monitoring feature of the graphical interface lets you detect abnormal behavior in the database cluster sooner.

Health monitoring periodically queries the relevant database system tables for each detection item to check whether the running status of the cluster and its queries meets business expectations. As soon as a status that does not meet expectations is found, a notification is sent. You can view notifications in the graphical interface; if checking the page regularly is inconvenient, you can also configure email notifications to receive alerts more promptly.
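The check-and-notify cycle described above can be pictured with the minimal Python sketch below. It is an illustration under stated assumptions, not YMatrix's implementation: the `run_once` and `run_forever` helpers, the idea of modeling each detection item as a callable, and the 60-second interval are all hypothetical.

```python
import time
from typing import Callable, Dict, List

# Hypothetical: each detection item is a callable that returns True when the
# observed status meets business expectations and False otherwise.
Check = Callable[[], bool]


def run_once(checks: Dict[str, Check], notify: Callable[[str], None]) -> List[str]:
    """Run every check once, notify for each failure, and return the failed names."""
    failed = []
    for name, check in checks.items():
        if not check():
            failed.append(name)
            notify(f"Detection item failed: {name}")
    return failed


def run_forever(checks: Dict[str, Check], notify: Callable[[str], None],
                interval_seconds: float = 60.0) -> None:
    """Repeat the checks at a fixed interval (the real interval is an assumption)."""
    while True:
        run_once(checks, notify)
        time.sleep(interval_seconds)
```

In this sketch, `notify` stands in for both delivery channels: a page-side event record and, when configured, an email.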

To log in to the graphical interface, enter the IP address of the machine where MatrixGate is located (by default, the Master's IP address) and the port number in your browser:

`http://<IP>:8240`

After logging in, open the Health Monitoring page.

Configuring an email account is optional. Once an email account is configured, you will also receive notifications by email.


Graphical Interface Domain Name

Alert emails include a link to the graphical interface so that recipients can quickly view the details of an alert event. If recipients cannot reach the default domain name, change this field to a domain name or address they can access.

SMTP Server Address

The SMTP server address consists of a host name or IP address and a port number. Example: smtp.example.com:465.
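Because the address is entered as a single `host:port` string, the minimal Python sketch below shows one way such a value could be split and validated. The `parse_smtp_address` helper is hypothetical, not part of YMatrix, and it does not handle bracketed IPv6 addresses.

```python
from typing import Tuple


def parse_smtp_address(address: str) -> Tuple[str, int]:
    """Split 'host:port' into (host, port); host may be a name or an IPv4 address."""
    # rpartition splits on the last colon, so the port is always the final field.
    host, sep, port_text = address.rpartition(":")
    if not sep or not host:
        raise ValueError(f"expected host:port, got {address!r}")
    port = int(port_text)  # raises ValueError if the port is not a number
    if not 0 < port < 65536:
        raise ValueError(f"port out of range: {port}")
    return host, port
```

Port 465 conventionally uses implicit TLS (SMTPS), while port 587 normally uses STARTTLS, so check which one your provider expects.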

Common third-party email servers

Note

If your organization runs its own email service, consult your email administrator or email service provider.

Username

The account used to authenticate with the SMTP server. This field is optional; fill it in only if the SMTP server requires a username for authentication. Example: user@example.com.

Password

The password for the SMTP username. This field is optional and only required when the SMTP server requires both a username and password for authentication.

Note

If your organization runs its own email service, consult your email administrator or email service provider.

Sender

If you use a third-party email service, this field should match the Username field. If you use a self-hosted email service, enter the sender's email address.

Recipient

Enter one or more recipient email addresses.
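To show how the fields above fit together, here is a minimal sketch using Python's standard `smtplib` and `email.message` modules. It is only an illustration, not how YMatrix sends its alerts: `build_alert` and `send_alert` are hypothetical helpers, and the use of implicit TLS (`SMTP_SSL`) assumes a server on a port such as 465.

```python
import smtplib
from email.message import EmailMessage
from typing import List, Optional


def build_alert(sender: str, recipients: List[str], subject: str, body: str) -> EmailMessage:
    """Build one alert message addressed to every recipient."""
    msg = EmailMessage()
    msg["From"] = sender                 # the Sender field
    msg["To"] = ", ".join(recipients)    # the Recipient field (one or more addresses)
    msg["Subject"] = subject
    msg.set_content(body)
    return msg


def send_alert(host: str, port: int, msg: EmailMessage,
               username: Optional[str] = None, password: Optional[str] = None) -> None:
    """Send over implicit TLS (assumed here; port 587 would need SMTP + starttls())."""
    with smtplib.SMTP_SSL(host, port, timeout=30) as server:
        # Username and Password are optional, mirroring the form fields.
        if username is not None:
            server.login(username, password or "")
        server.send_message(msg)
```

For a third-party provider, pass the same address as both `sender` and `username`, matching the guidance for the Sender field.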

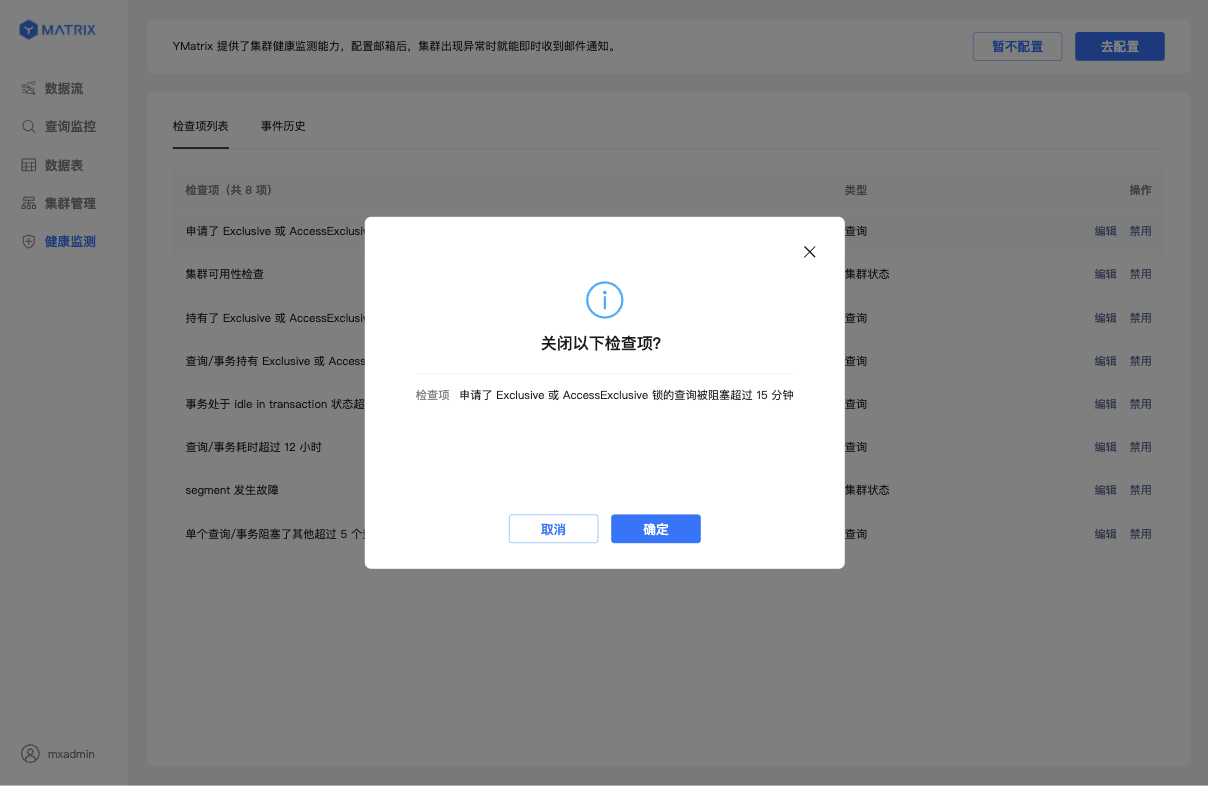

The list below shows the monitoring items currently provided by YMatrix. All of them are enabled by default; you can enable or disable them as needed.

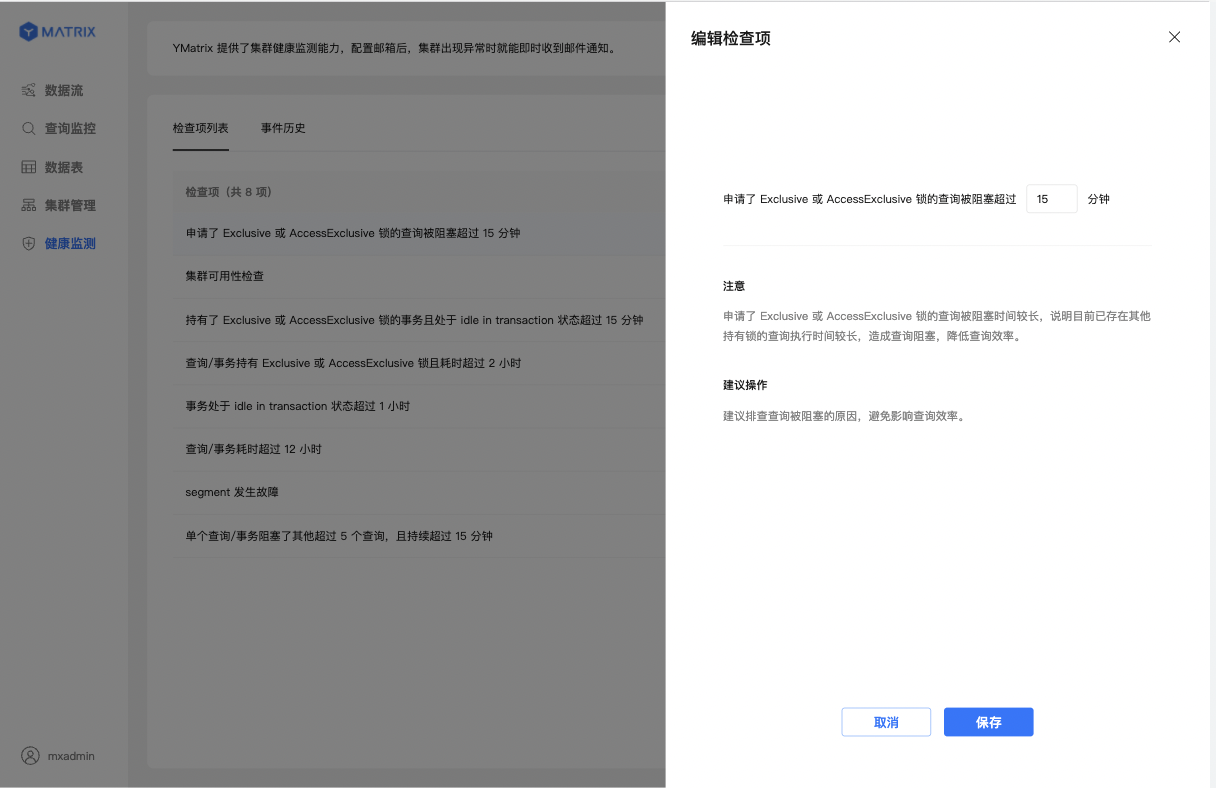

If the default parameters of a monitoring item do not suit your business scenario, you can modify them.

| No. | Detection item | Description |
| --- | --- | --- |
| 1 | Cluster unavailable | Checks cluster availability by periodically running `SELECT * FROM gp_dist_random('gp_id');`. If the query fails three times in a row, the cluster has most likely crashed. Possible causes include simultaneous failure of a primary segment and its mirror, a network failure, a power outage, or a hardware failure. |
| 2 | Segment failure | When a primary segment fails, its workload shifts to the corresponding mirror segment, skewing resource usage toward the host where the mirror resides. That host's processing load increases and queries slow down; in severe cases, its memory can be exhausted and the cluster becomes unavailable. A failed mirror segment reduces the cluster's high availability: if the corresponding primary segment then fails, the cluster becomes unavailable. |
| 3 | Query/transaction running for more than 12 hours | A long-running query or transaction can occupy large amounts of memory, CPU, and other server resources, slowing database responses and possibly triggering OOM (out-of-memory) errors. It can also delay VACUUM. |
| 4 | Transaction in the "idle in transaction" state for more than 1 hour | A transaction that stays idle in transaction for a long time blocks most queries on the tables it involves. It also prevents VACUUM from reclaiming dead rows, causing table bloat. |
| 5 | A single query/transaction blocks more than 5 other queries for more than 15 minutes | A query or transaction that blocks many other queries for a long time can easily cause other statements to block one another, reducing service responsiveness. |
| 6 | Query requesting an Exclusive or AccessExclusive lock blocked for more than 15 minutes | Queries that wait a long time for table-level Exclusive or AccessExclusive locks can cause other queries to block and pile up, reducing service responsiveness. |
| 7 | Query/transaction holding an Exclusive or AccessExclusive lock for more than 2 hours | A query or transaction that holds a table-level Exclusive or AccessExclusive lock for a long time blocks all queries on the locked tables, reducing service responsiveness. |
| 8 | Transaction holding an Exclusive or AccessExclusive lock in the "idle in transaction" state for more than 15 minutes | If a transaction holds an Exclusive or AccessExclusive lock and stays idle in transaction for more than 15 minutes, most queries on the tables it involves are blocked, reducing service responsiveness. |
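The detection conditions in the table can be expressed as simple rules. The sketch below is a hypothetical illustration, not YMatrix's implementation: it models the three-consecutive-failures rule of item 1 and the default thresholds of items 3 and 4. The `Session` record, its fields (named after columns of PostgreSQL's `pg_stat_activity` view), and all helper names are assumptions.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List


class ConsecutiveFailureDetector:
    """Item 1 (hypothetical sketch): alert after N consecutive failed probes."""

    def __init__(self, threshold: int = 3) -> None:
        self.threshold = threshold
        self.failures = 0

    def record(self, probe_succeeded: bool) -> bool:
        """Record one probe result; return True only on the probe that reaches the threshold."""
        if probe_succeeded:
            self.failures = 0  # any success resets the streak
            return False
        self.failures += 1
        # '==' rather than '>=' so a long outage raises one alert, not one per probe
        return self.failures == self.threshold


@dataclass
class Session:
    # Field names loosely mirror PostgreSQL's pg_stat_activity view (assumption).
    pid: int
    state: str              # e.g. "active" or "idle in transaction"
    xact_start: datetime    # when the current transaction started
    state_change: datetime  # when `state` last changed


# Default thresholds from the table; adjustable in the interface.
LONG_TRANSACTION = timedelta(hours=12)    # item 3
IDLE_IN_TRANSACTION = timedelta(hours=1)  # item 4


def find_violations(sessions: List[Session], now: datetime) -> List[str]:
    """Return a message for each item-3 or item-4 breach, in session order."""
    findings = []
    for s in sessions:
        if now - s.xact_start > LONG_TRANSACTION:
            findings.append(f"pid {s.pid}: transaction running for more than 12 hours")
        if s.state == "idle in transaction" and now - s.state_change > IDLE_IN_TRANSACTION:
            findings.append(f"pid {s.pid}: idle in transaction for more than 1 hour")
    return findings
```

Since the thresholds can be changed in the interface, the sketch keeps them as named constants rather than inlining them.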

If you have configured an email account, you will receive an email whenever an event meets a detection item's alert conditions.

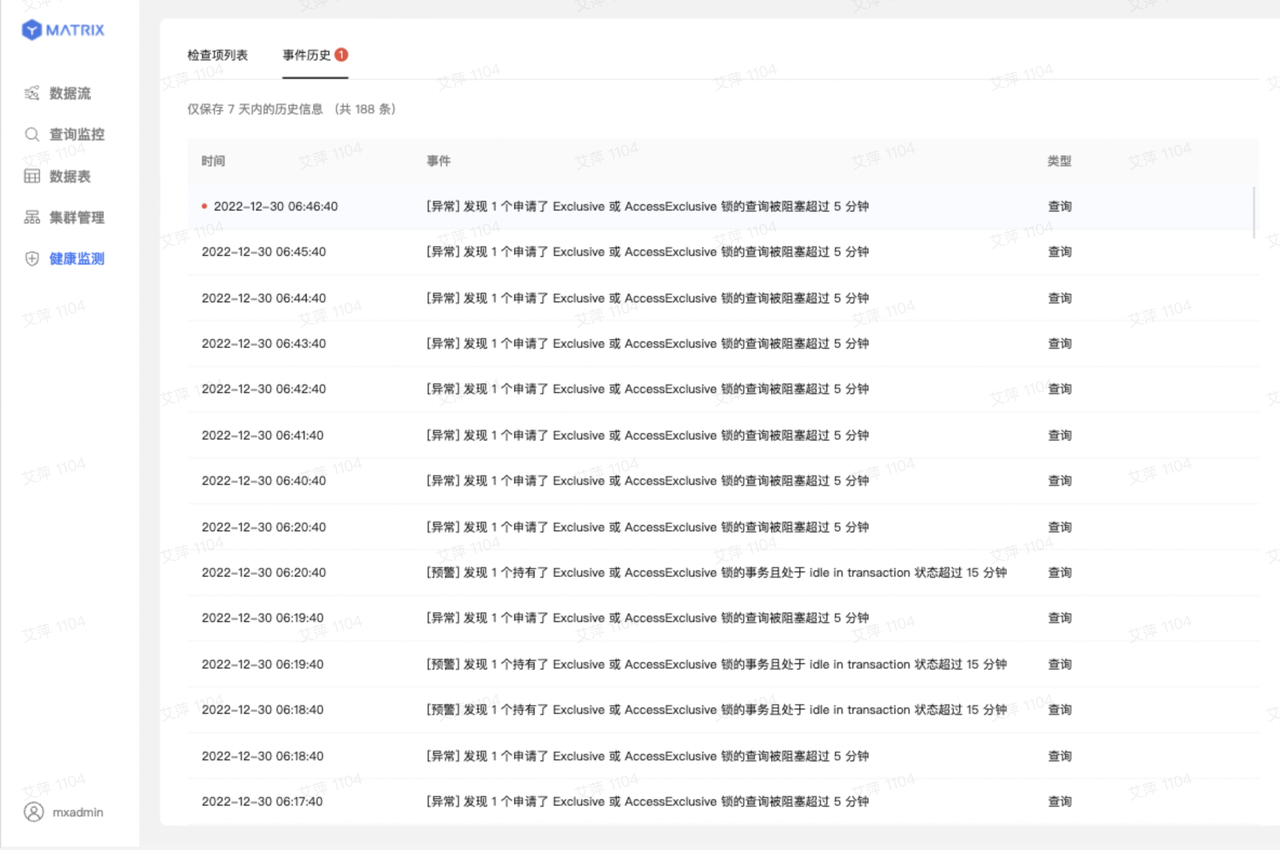

Whether or not you have configured an email account, you can view the records of cluster events that met a detection item's alert conditions in the “Event History” section.